Last Updated: 2023-03-08

Helm

Helm helps you manage Kubernetes applications — Helm Charts help you define, install, and upgrade even the most complex Kubernetes application.

For a typical cloud-native application with a 3-tier architecture, the diagram below illustrates how it might be described in terms of Kubernetes objects. In this example, each tier consists of a Deployment and a Service object, and may additionally define ConfigMap or Secret objects. Each of these objects is typically defined in a separate YAML file and fed into the kubectl command-line tool.

A Helm chart encapsulates each of these YAML definitions, provides a mechanism for configuration at deploy-time and allows you to define metadata and documentation that might be useful when sharing the package. Helm can be useful in different scenarios:

- Find and use popular software packaged as Kubernetes charts

- Share your own applications as Kubernetes charts

- Create reproducible builds of your Kubernetes applications

- Intelligently manage your Kubernetes object definitions

- Manage releases of Helm packages

What you'll build

In this codelab, you're going to deploy a simple application to your Kubernetes cluster using a Helm chart.

What you'll need

- A recent version of your favorite web browser

- Basic knowledge of Bash

- An AWS account

Before you can use Kubernetes to deploy your application, you need a cluster of machines to deploy it to. The cluster abstracts away the details of the underlying machines.

Machines can later be added, removed, or rebooted, and containers are automatically distributed or redistributed across whatever machines are available in the cluster. Machines within a cluster can be set to autoscale up or down to meet demand, and they can be located in different zones for high availability.

Open Cloud Shell

You will do some of the work from AWS CloudShell, a browser-based command-line environment running in the cloud. It comes preloaded with some of the development tools you'll need (such as the AWS CLI and Python), offers a persistent 1 GB home directory, and runs inside AWS, which improves network performance and lets it use the credentials of your console session. Open AWS CloudShell by clicking its icon at the top right of the console:

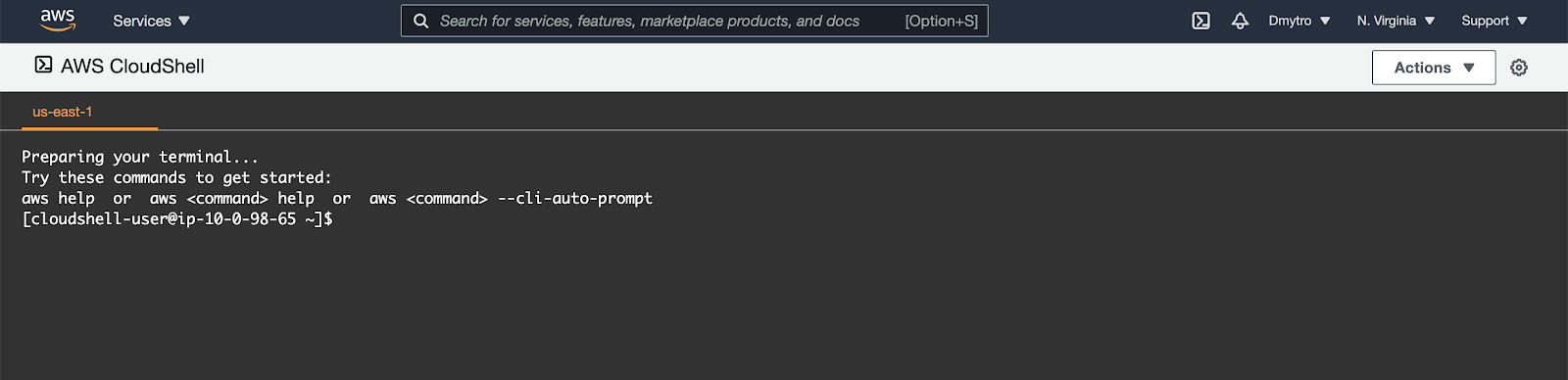

You should see the shell prompt open in a new tab.

Initial setup

Before creating a cluster, you must install and configure the following tools:

- kubectl: a command-line tool for working with Kubernetes clusters.

- eksctl: a command-line tool for working with EKS clusters that automates many individual tasks.

To install eksctl, run the following:

```shell
$ curl --silent --location "https://github.com/weaveworks/eksctl/releases/latest/download/eksctl_$(uname -s)_amd64.tar.gz" | \
    tar xz -C /tmp
$ sudo mv -v /tmp/eksctl /usr/local/bin
```

Confirm the eksctl command works:

```shell
$ eksctl version
```

To install kubectl, run the following:

```shell
$ curl -Lo kubectl "https://dl.k8s.io/release/$(curl -Ls https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"
$ chmod +x ./kubectl
$ sudo mv ./kubectl /usr/local/bin/kubectl
```

Create a Cluster

With eksctl you can create a cluster by running the eksctl create cluster command and supplying all the required flags. Because there are many configuration options, however, the command can become very long. Instead, we can write all the options to a YAML file and pass it as input (replace your_name_here with your name):

```shell
$ export ACCOUNT_ID=$(aws sts get-caller-identity --query "Account" --output text)
$ export YOUR_NAME=your_name_here
$ cat << EOF > ekscfg.yaml
---
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: ${YOUR_NAME}-eks
  region: us-east-1

iam:
  serviceRolePermissionsBoundary: "arn:aws:iam::${ACCOUNT_ID}:policy/CustomPowerUserBound"

managedNodeGroups:
  - name: nodegroup
    desiredCapacity: 3
    instanceType: t2.small
    ssh:
      allow: false
    iam:
      instanceRolePermissionsBoundary: "arn:aws:iam::${ACCOUNT_ID}:policy/CustomPowerUserBound"

cloudWatch:
  clusterLogging:
    enableTypes: ["api", "audit", "controllerManager"]

availabilityZones: ['us-east-1a', 'us-east-1b', 'us-east-1c', 'us-east-1d']
EOF
```

Next, create the cluster:

```shell
$ eksctl create cluster -f ekscfg.yaml
```

Install Helm

Start by installing the helm command line client.

```shell
$ wget https://get.helm.sh/helm-v3.11.2-linux-amd64.tar.gz
$ tar -zxvf helm-v3.11.2-linux-amd64.tar.gz
$ sudo mv linux-amd64/helm /usr/local/bin/helm
```

Create Example Kubernetes Manifest

Writing a Helm Chart is easier when you're starting with an existing set of Kubernetes manifests.

Create and change to the demo directory.

```shell
$ mkdir ~/demo-charts/
$ cd ~/demo-charts/
```

Let's create working manifests 😁 Create a deployment.yaml:

```shell
$ sudo yum install nano -y
$ nano deployment.yaml
```

And paste the following:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: example
  name: example
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: example
  template:
    metadata:
      labels:
        app: example
    spec:
      containers:
      - image: nginx:1.19.4-alpine
        imagePullPolicy: IfNotPresent
        name: nginx
        resources: {}
```

Do the same for service.yaml. Create it:

```shell
$ nano service.yaml
```

And paste the following:

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: example
  name: example
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: example
  type: LoadBalancer
```

Deploy it into the cluster:

```shell
$ kubectl create -f ~/demo-charts/
```

Verify that NGINX is working (you might need to wait a minute or two for the load balancer):

```shell
$ NGINX_IP=$(kubectl get -o jsonpath="{.status.loadBalancer.ingress[0].hostname}" svc example)
$ curl $NGINX_IP
```

If all is going well, the curl command returns the "Welcome to nginx!" page. We can use these manifests to bootstrap our Helm chart:

```shell
$ tree ~/demo-charts
/home/user/demo-charts
├── deployment.yaml
└── service.yaml
```

Before we move on, we should clean up our environment:

```shell
$ kubectl delete -f ~/demo-charts
deployment.apps "example" deleted
service "example" deleted
```

Generate the Chart

The best way to get started with a new chart is to use the helm create command to scaffold out an example we can build on. Use this command to create a new chart named mychart in our demo directory:

```shell
$ helm create mychart
```

Helm will create a new directory called mychart with the structure shown below. Let's find out how it works.

```text
$ tree mychart/
mychart/
|-- charts
|-- Chart.yaml
|-- templates
|   |-- deployment.yaml
|   |-- _helpers.tpl
|   |-- hpa.yaml
|   |-- ingress.yaml
|   |-- NOTES.txt
|   |-- serviceaccount.yaml
|   |-- service.yaml
|   `-- tests
|       `-- test-connection.yaml
`-- values.yaml
```

Helm created a number of files and directories:

- Chart.yaml: the metadata for your Helm chart.

- values.yaml: values that can be used as variables in your templates.

- templates/*.yaml: example Kubernetes manifests.

- _helpers.tpl: helper functions that can be used inside the templates.

- templates/NOTES.txt: templated notes that are displayed on chart install.

As mentioned earlier, a Helm chart consists of metadata that describes what the application is, can constrain the Kubernetes versions the chart supports (the kubeVersion field), and tracks the version of the chart itself. All of this metadata lives in the Chart.yaml file. The Helm documentation describes the different fields for this file.
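The name and version fields in particular determine how the chart is identified once it is packaged: helm package, used later in this codelab, writes an archive named after them. The Bash sketch below reads those two fields and prints the expected file name. It is only an illustration: it assumes the simple one-key-per-line layout and unquoted values used in this codelab's Chart.yaml, it is not a YAML parser, and the function name chart_package_name is hypothetical.

```shell
#!/usr/bin/env bash
# chart_package_name (hypothetical helper): print "<name>-<version>.tgz"
# for a Chart.yaml, mirroring how helm package names its archive.
# Assumes top-level "name:" and "version:" keys with unquoted values.
chart_package_name() {
  local chart_yaml=${1:-Chart.yaml}
  local name version
  # Split each line on ":" plus optional spaces and take the value
  # of the first line that starts with the key.
  name=$(awk -F': *' '/^name:/ { print $2; exit }' "$chart_yaml")
  version=$(awk -F': *' '/^version:/ { print $2; exit }' "$chart_yaml")
  if [[ -z $name || -z $version ]]; then
    echo "could not find name and version in $chart_yaml" >&2
    return 1
  fi
  printf '%s-%s.tgz\n' "$name" "$version"
}
```

Run against the Chart.yaml shown in the next step, chart_package_name mychart/Chart.yaml would print mychart-0.1.0.tgz. Note that keys such as apiVersion and appVersion are not matched, because the patterns are anchored to the start of the line.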

Edit Chart.yaml:

```shell
$ vi mychart/Chart.yaml
```

And change it so that it looks like this:

```yaml
apiVersion: v2
name: mychart
description: NGINX chart for Kubernetes
type: application
version: 0.1.0
appVersion: 1.19.4-alpine
```

Remove the currently unused *.yaml and NOTES.txt files and copy our example Kubernetes manifests to the templates folder.

```shell
$ rm ~/demo-charts/mychart/templates/*.yaml
$ rm ~/demo-charts/mychart/templates/NOTES.txt
$ cp ~/demo-charts/*.yaml ~/demo-charts/mychart/templates/
```

The file structure should look like this now:

```text
$ tree mychart/
mychart/
├── charts
├── Chart.yaml
├── templates
│   ├── deployment.yaml
│   ├── _helpers.tpl
│   ├── service.yaml
│   └── tests
│       └── test-connection.yaml
└── values.yaml
```

Templates

The most important piece of the puzzle is the templates/ directory. This is where Helm finds the YAML definitions for your Services, Deployments, and other Kubernetes objects. We have already replaced the generated YAML files with our own. What you end up with is a working chart that can be deployed using the helm install command.

It's worth noting, however, that the directory is named templates for a reason: Helm runs each file in this directory through a Go template rendering engine. Helm extends the template language, adding a number of utility functions for writing charts.

There are a number of built-in objects (such as .Values, .Release, and .Chart) that we can use in our templates. Before we try them, replace the generated values.yaml with our own:

```shell
$ rm ~/demo-charts/mychart/values.yaml
$ vi ~/demo-charts/mychart/values.yaml
```

And paste the following:

```yaml
replicaCount: 1

image:
  repository: nginx
  pullPolicy: IfNotPresent

service:
  type: ClusterIP
  port: 80

resources:
  limits:
    cpu: 100m
    memory: 128Mi
  requests:
    cpu: 100m
    memory: 128Mi
```

Now we can use a number of built-in objects to customize our manifests. First, open the service.yaml:

```shell
$ vi ~/demo-charts/mychart/templates/service.yaml
```

Since we now have some parameters in our values.yaml file, we can use the built-in .Values object to access them in the template. The .Values object is a key element of Helm charts, used to expose configuration that can be set at deploy time (the defaults for this object are defined in values.yaml). Templating can greatly reduce boilerplate and simplify your definitions. Add the .Values.service.port and .Values.service.type variables to our template:

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: example
  name: example
  namespace: default
spec:
  ports:
  - port: {{ .Values.service.port }}
    protocol: TCP
    targetPort: 80
  selector:
    app: example
  type: {{ .Values.service.type }}
```

Save the file. To check that the variables work, we can run helm template, which runs the templating engine and prints the generated manifests. By default it renders every file in the templates folder; to limit the output to a specific file, use the -s (--show-only) flag:

```shell
$ helm template mychart/ -s templates/service.yaml
---
# Source: mychart/templates/service.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: example
  name: example
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: example
  type: ClusterIP
```

You should see the port and type substituted by the variables in values.yaml.

Next, our Service has its name hard-coded to "example". If we use Helm to deploy this chart multiple times in the same namespace, the releases will collide on the same object name. To make every object unique per helm install, we can make use of partials defined in _helpers.tpl, as well as functions like replace. The Helm documentation has a deeper walkthrough of the templating language, explaining how functions, partials, and flow control can be used when developing your chart. We can include a partial called mychart.fullname, which generates a name from the release name and the chart name. To add it, modify the service.yaml:

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: example
  name: {{ include "mychart.fullname" . }}
  namespace: default
spec:
  ports:
  - port: {{ .Values.service.port }}
    protocol: TCP
    targetPort: 80
  selector:
    app: example
  type: {{ .Values.service.type }}
```

Once again, we have hard-coded label selectors (and labels, for that matter), which will cause problems if we install the chart multiple times. To fix this, we can use the built-in Release object, which contains information about the current release, and use its name as the label and selector value (we'll do the same in the deployment later).

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: {{ .Release.Name }}
  name: {{ include "mychart.fullname" . }}
  namespace: default
spec:
  ports:
  - port: {{ .Values.service.port }}
    protocol: TCP
    targetPort: 80
  selector:
    app: {{ .Release.Name }}
  type: {{ .Values.service.type }}
```

If we run the template again, we'll see that the variable is substituted by the RELEASE-NAME placeholder.

```shell
$ helm template mychart/ -s templates/service.yaml
---
# Source: mychart/templates/service.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: RELEASE-NAME
  name: RELEASE-NAME-mychart
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: RELEASE-NAME
  type: ClusterIP
```

This happens because the release name is chosen (or generated) during installation and is not known yet. We could pass a release name to helm template as an extra argument, but instead let's do a dry run of helm install with debug output enabled to inspect the generated definitions:

```shell
$ helm install --dry-run --debug test ./mychart
NAME: test
(...)
COMPUTED VALUES: ...
(...)
---
# Source: mychart/templates/service.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: test
  name: test-mychart
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: test
  type: ClusterIP
---
(...)
```

Also, if a user of your chart wanted to change the default configuration, they could provide overrides directly on the command-line:

```shell
$ helm install --dry-run --debug test ./mychart --set service.port=8080
(...)
---
# Source: mychart/templates/service.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: test
  name: test-mychart
  namespace: default
spec:
  ports:
  - port: 8080
    protocol: TCP
    targetPort: 80
  selector:
    app: test
  type: ClusterIP
---
(...)
```

For more advanced configuration, a user can specify a YAML file containing overrides with the --values option.
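A minimal sketch of such a file, assuming the values.yaml defined earlier in this codelab: dotted paths used with --set map onto nested YAML keys, so the file written below is equivalent to passing --set service.type=LoadBalancer --set service.port=8080. The file name overrides.yaml is arbitrary.

```shell
#!/usr/bin/env bash
# Write a values override file. Each dotted --set path becomes nested keys:
#   --set service.type=LoadBalancer  ->  service: { type: LoadBalancer }
#   --set service.port=8080          ->  service: { port: 8080 }
cat > overrides.yaml <<'EOF'
service:
  type: LoadBalancer
  port: 8080
EOF

# Print the file so you can check the indentation before using it.
cat overrides.yaml
```

You could then render the chart with `helm install --dry-run --debug test ./mychart --values overrides.yaml` (-f is the short form of --values). If you combine both mechanisms, values given with --set take precedence over those from --values files.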

Next, let's modify our deployment to use the rest of the variables and to showcase additional functions and pipelines. It also uses the .Chart object, which exposes metadata about the chart, such as its name or version, to your definitions.

Edit the deployment.yaml:

```shell
$ vi ~/demo-charts/mychart/templates/deployment.yaml
```

And edit it to look like this:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: {{ .Release.Name }}
  name: {{ include "mychart.fullname" . }}
  namespace: default
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      app: {{ .Release.Name }}
  template:
    metadata:
      labels:
        app: {{ .Release.Name }}
    spec:
      containers:
      - image: "{{ .Values.image.repository }}:{{ .Chart.AppVersion }}"
        imagePullPolicy: {{ .Values.image.pullPolicy }}
        name: {{ .Chart.Name }}
        resources:
          {{- toYaml .Values.resources | nindent 10 }}
```

Run the dry run again and check if the templating is working:

```shell
$ helm install --dry-run --debug test ./mychart
(...)
# Source: mychart/templates/deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: test
  name: test-mychart
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test
  template:
    metadata:
      labels:
        app: test
    spec:
      containers:
      - image: "nginx:1.19.4-alpine"
        imagePullPolicy: IfNotPresent
        name: mychart
        resources:
          limits:
            cpu: 100m
            memory: 128Mi
          requests:
            cpu: 100m
            memory: 128Mi
```

Documentation

Another useful file in the templates/ directory is the NOTES.txt file. This is a templated, plaintext file that gets printed out after the chart is successfully deployed. As we'll see when we deploy our first chart, this is a useful place to briefly describe the next steps for using a chart. Since NOTES.txt is run through the template engine, you can use templating to print out working commands for obtaining an IP address, or getting a password from a Secret object.
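Commands that read a password from a Secret usually end with base64 -d, because Kubernetes stores the values in a Secret's data field base64-encoded. The round trip below illustrates this locally, without a cluster, using verysecret, the example MariaDB root password set later in this codelab.

```shell
#!/usr/bin/env bash
# Encode the example password the way it appears in a Secret's .data field.
encoded=$(printf '%s' 'verysecret' | base64)
echo "$encoded"    # prints dmVyeXNlY3JldA==

# Decode it again, as "kubectl get secret ... | base64 -d" does.
decoded=$(printf '%s' "$encoded" | base64 -d)
echo "$decoded"    # prints verysecret
```

Using printf instead of echo avoids encoding a trailing newline into the value, which would otherwise end up as part of the stored password.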

Let's create a new NOTES.txt:

```shell
$ vi mychart/templates/NOTES.txt
```

And paste the following:

```text
Get the application URL by running these commands:
{{- if contains "LoadBalancer" .Values.service.type }}
  It may take a few minutes for the LoadBalancer IP to be available.
  export SERVICE_IP=$(kubectl get svc --namespace {{ .Release.Namespace }} {{ include "mychart.fullname" . }} --template "{{"{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}"}}")
  echo http://$SERVICE_IP:{{ .Values.service.port }}
{{- else if contains "ClusterIP" .Values.service.type }}
  export POD_NAME=$(kubectl get pods --namespace {{ .Release.Namespace }} -l "app={{ .Release.Name }}" -o jsonpath="{.items[0].metadata.name}")
  echo "Visit http://127.0.0.1:8080 to use your application"
  kubectl --namespace {{ .Release.Namespace }} port-forward $POD_NAME 8080:80
{{- else }}
  Please use either LoadBalancer or ClusterIP Service type.
{{- end }}
```

Install the application

The chart you created in the previous step is set up to run an NGINX server exposed via a Kubernetes Service. By default, the chart will create a ClusterIP type Service, so NGINX will only be exposed internally in the cluster. To access it externally, we'll use the LoadBalancer type instead. We can also set the name of the Helm release so we can easily refer back to it. Let's go ahead and deploy our NGINX chart using the helm install command:

```shell
$ helm install example ./mychart --set service.type=LoadBalancer
NAME: example
NAMESPACE: default
STATUS: deployed
REVISION: 1
NOTES:
Get the application URL by running these commands:
  It may take a few minutes for the LoadBalancer IP to be available.
  export SERVICE_IP=$(kubectl get svc --namespace default example-mychart --template "{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}")
  echo http://$SERVICE_IP:80
```

The output of helm install displays a summary of the state of the release and the rendered NOTES.txt file, which explains what to do next. Run the commands from the output to get a URL for the NGINX service, then curl it (you will probably need to wait 1-2 minutes for the load balancer to get its address).
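Because the load balancer address can take a while to appear, you may prefer to poll for it instead of re-running the command by hand. The Bash function below is a minimal sketch (wait_for_output is a hypothetical name, not part of kubectl or Helm): it re-runs any command until it prints something non-empty, up to a given number of attempts.

```shell
#!/usr/bin/env bash
# wait_for_output ATTEMPTS DELAY COMMAND [ARGS...]
# Re-run COMMAND until it prints non-empty output, then print that output.
# Returns 1 if every attempt produced empty output (or failed).
wait_for_output() {
  local attempts=$1 delay=$2
  shift 2
  local i out
  for ((i = 1; i <= attempts; i++)); do
    # Ignore errors: the address may simply not exist yet.
    out=$("$@" 2>/dev/null) || true
    if [[ -n $out ]]; then
      printf '%s\n' "$out"
      return 0
    fi
    # Wait before the next attempt (but not after the last one).
    if ((i < attempts)); then
      sleep "$delay"
    fi
  done
  echo "no output after $attempts attempts: $*" >&2
  return 1
}
```

For example, `SERVICE_IP=$(wait_for_output 30 10 kubectl get svc --namespace default example-mychart -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')` tries up to 30 times, 10 seconds apart. On EKS the load balancer address is published as a host name, which is why this reads the hostname field.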

```shell
$ export SERVICE_IP=$(kubectl get svc --namespace default example-mychart --template "{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}")
$ curl http://$SERVICE_IP:80/
```

If all went well, you should see the HTML output of an NGINX welcome page. Congratulations! You've just deployed your very first service packaged as a Helm chart!
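The Service you just reached is named example-mychart (as shown in the NOTES output above). That name comes from the mychart.fullname partial in templates/_helpers.tpl, which our templates include. As an illustration only, the Bash function below approximates the logic of that helper as scaffolded by helm create: it joins the release and chart names with a dash, reuses the release name on its own if it already contains the chart name, and truncates the result to 63 characters. It ignores the nameOverride and fullnameOverride values, which our values.yaml does not define, and the function name fullname is hypothetical.

```shell
#!/usr/bin/env bash
# fullname RELEASE CHART
# Approximation of the "mychart.fullname" helper generated by helm create
# (without nameOverride / fullnameOverride support).
fullname() {
  local release=$1 chart=$2 name
  if [[ $release == *"$chart"* ]]; then
    # The release name already contains the chart name: use it as-is.
    name=$release
  else
    # Otherwise join them, e.g. "example" + "mychart" -> "example-mychart".
    name="$release-$chart"
  fi
  # Truncate to 63 characters (the DNS label length limit) ...
  name=${name:0:63}
  # ... and drop a single trailing dash that truncation may leave behind.
  printf '%s\n' "${name%-}"
}
```

With this logic, fullname example mychart prints example-mychart and fullname mychart-prod mychart prints mychart-prod. Because the release name is part of every object name, installing the chart again under another release name does not collide with the objects of this release.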

Let's check what resources we have currently deployed:

```text
$ helm get manifest example | kubectl get -f -
NAME                      TYPE           CLUSTER-IP   EXTERNAL-IP     PORT(S)        AGE
service/example-mychart   LoadBalancer   10.4.x.xxx   xx.xxx.xxx.xx   80:xxxxx/TCP   2m47s

NAME                              READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/example-mychart   1/1     1            1           2m47s
```

Package

So far, we've been using the helm install command to install a local, unpacked chart. However, if you want to share your charts with your team or your customers, they will typically install the charts from a packaged archive (.tgz). We can use helm package to create it:

```shell
$ helm package ./mychart
```

Helm will create a mychart-0.1.0.tgz package in our working directory, using the name and version from the metadata defined in the Chart.yaml file. A user can install from this package instead of a local directory by passing the package as the parameter to helm install.

```shell
$ helm install example2 mychart-0.1.0.tgz --set service.type=LoadBalancer
```

Repositories

To make sharing packages much easier, Helm has built-in support for installing packages from an HTTP server. Helm reads a repository index (index.yaml) hosted on the server, which describes what chart packages are available and where they are located.

We can use ChartMuseum, an open-source Helm repository server, to run a local repository that serves our chart. It supports different storage backends (S3, GCS, etc.), but we'll use local storage as an example. First, install ChartMuseum:

$ curl -LO https://s3.amazonaws.com/chartmuseum/release/latest/bin/linux/amd64/chartmuseum

$ chmod +x ./chartmuseum

$ sudo mv ./chartmuseum /usr/local/binNext, run the following command to launch our server in the current shell:

$ chartmuseum --port=8080 \

--storage="local" \

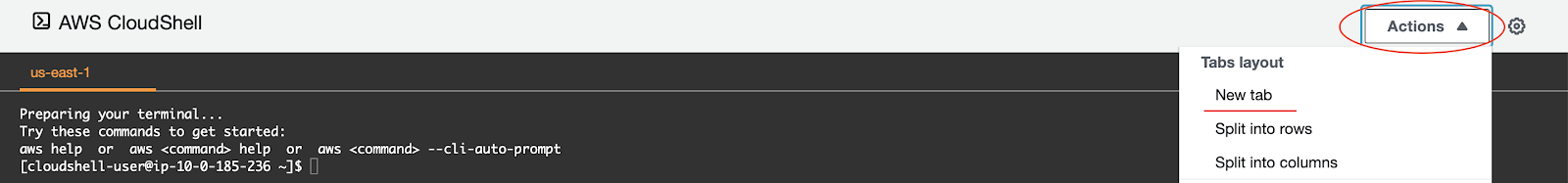

--storage-local-rootdir="./chartstorage"Now you can upload the Chart to the repository. Open a new Cloud Shell tab and run:

```shell
$ cd ~/demo-charts
$ curl --data-binary "@mychart-0.1.0.tgz" http://localhost:8080/api/charts
{"saved":true}
```

To install the chart from the repository, we need to add the repository to Helm's repository list and fetch its index:

```shell
$ helm repo add local http://localhost:8080
$ helm repo update
```

Now you should be able to see your chart in the local repository and install it from there:

```shell
$ helm search repo local
NAME            CHART VERSION   APP VERSION     DESCRIPTION
local/mychart   0.1.0           1.19.4-alpine   NGINX chart for Kubernetes
$ helm install example3 local/mychart --set service.type=LoadBalancer
```

To set up a remote repository you can follow the guide in the Helm documentation.

Dependencies

As the applications that you're packaging as charts increase in complexity, you might find you need to pull in a dependency such as a database. Helm allows you to specify sub-charts that will be created as part of the same release. To define a dependency, edit the Chart.yaml file in the chart root directory:

```shell
$ nano mychart/Chart.yaml
```

And add the following:

```yaml
dependencies:
  - name: mariadb
    version: 9.1.4
    repository: https://charts.bitnami.com/bitnami
```

Much like a runtime language dependency file (such as Python's requirements.txt), the dependencies list in the Chart.yaml file lets you manage your chart's dependencies and their versions. When dependencies are updated, a lock file (Chart.lock) is generated so that subsequent fetches use a known, working version. Run the following command to pull in the MariaDB dependency we defined:

```shell
$ helm dep update ./mychart
...
Hang tight while we grab the latest from your chart repositories...
...Successfully got an update from the "stable" chart repository
Update Complete. ⎈Happy Helming!⎈
Saving 1 charts
Downloading mariadb from repo https://charts.bitnami.com/bitnami
Deleting outdated charts
```

Helm found a matching version in the bitnami repository and fetched it into the chart's charts/ subdirectory:

```shell
$ ls ./mychart/charts
mariadb-9.1.4.tgz
```

Now when we install the chart, MariaDB's objects are created too. We can also pass value overrides to a dependency the same way we do for our own chart, by nesting them under the dependency's name (here, mariadb):

```shell
$ helm install example4 ./mychart --set service.type=LoadBalancer --set mariadb.auth.rootPassword=verysecret
$ helm get manifest example4 | kubectl get -f -
```

Check if the password override worked:

```shell
$ echo `kubectl get secret example4-mariadb -o=jsonpath="{.data.mariadb-root-password}" | base64 -d`
```

Delete a Release

To list all the installed releases you can run:

```shell
$ helm ls
```

Now, you can delete every release by name:

```shell
for name in "example" "example2" "example3" "example4"
do
  /usr/local/bin/helm uninstall "$name"
done
```

Delete the cluster and all node groups

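```shell
# ekscfg.yaml was written to your home directory before the cluster was created
$ eksctl delete cluster \
    --config-file="$HOME/ekscfg.yaml"
```

When the cluster has been removed, delete the demo folder:

```shell
$ rm -r ~/demo-charts
```

Delete the local Helm repository:

```shell
$ helm repo remove local
```

Thank you! :)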