Last Updated: 2023-03-26

Jenkins

Jenkins is a free and open source automation server. It helps automate the parts of software development related to building, testing, and deploying, facilitating continuous integration and continuous delivery. It is a server-based system that runs in servlet containers such as Apache Tomcat.

It supports version control tools, including AccuRev, CVS, Subversion, Git, Mercurial, Perforce, ClearCase and RTC, and can execute Apache Ant, Apache Maven and sbt based projects as well as arbitrary shell scripts and Windows batch commands.

What you'll build

In this codelab, you're going to deploy a Jenkins instance to Kubernetes. Then you will configure Jenkins to build and deploy a small application to Kubernetes using Helm.

What you'll need

- A recent version of your favorite Web Browser

- Basics of BASH

- AWS Account

Before you can use Kubernetes to deploy your application, you need a cluster of machines to deploy it to. The cluster abstracts away the details of the underlying machines.

Machines can later be added, removed, or rebooted and containers are automatically distributed or re-distributed across whatever machines are available in the cluster. Machines within a cluster can be set to autoscale up or down to meet demand. Machines can be located in different zones for high availability.

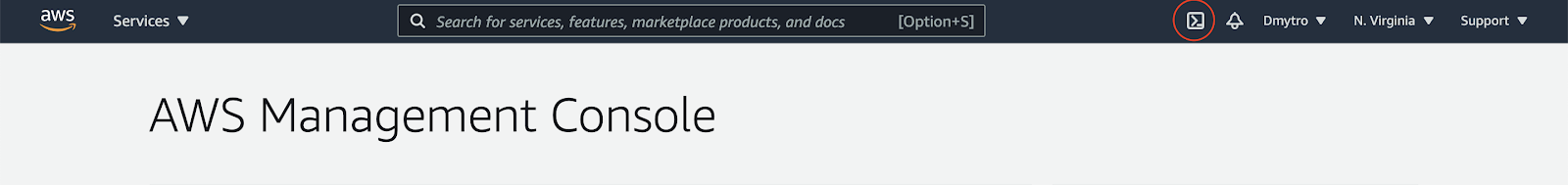

Open Cloud Shell

You will do some of the work from the Amazon Cloud Shell, a command line environment running in the cloud. This virtual machine comes loaded with the development tools you'll need (aws cli, python) and offers a persistent 1GB home directory. Because it runs in AWS, network performance and authentication are greatly enhanced. Open the Amazon Cloud Shell by clicking the icon at the top right of the screen:

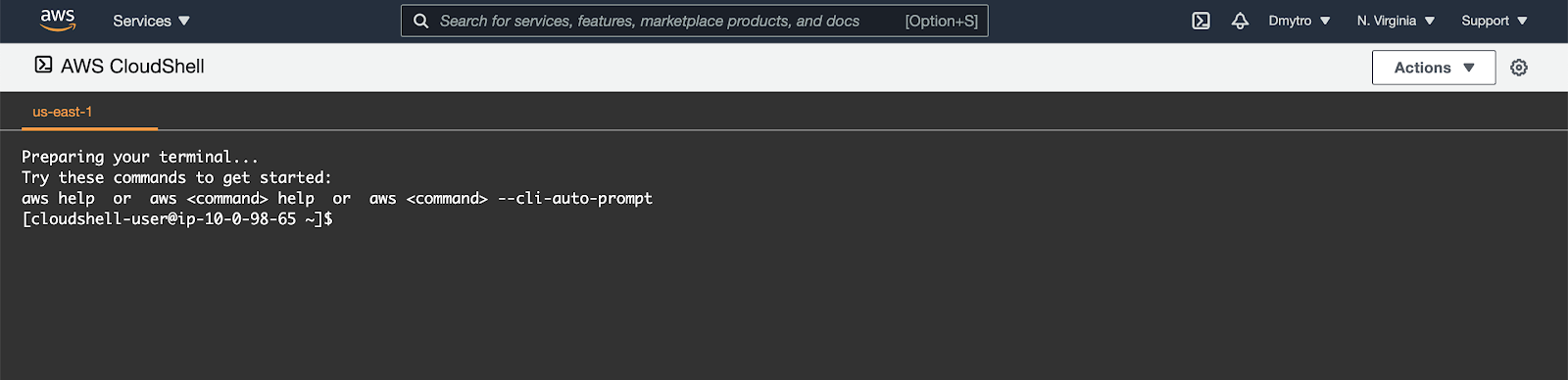

You should see the shell prompt open in the new tab:

Initial setup

Before creating a cluster, you must install and configure the following tools:

- kubectl: A command line tool for working with Kubernetes clusters.

- kops: A command line tool for Kubernetes clusters in the AWS Cloud.

To install kops, run the following:

$ curl -Lo kops https://github.com/kubernetes/kops/releases/download/$(curl -s https://api.github.com/repos/kubernetes/kops/releases/latest | grep tag_name | cut -d '"' -f 4)/kops-linux-amd64
$ chmod +x ./kops
$ sudo mv ./kops /usr/local/bin/

Confirm the kops command works:

$ kops version

To install kubectl, run the following:

$ curl -Lo kubectl https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/amd64/kubectl
$ chmod +x ./kubectl
$ sudo mv ./kubectl /usr/local/bin/kubectl

kops needs a state store to hold the configuration for your clusters. The simplest configuration for AWS is to store it in an S3 bucket in the same account, so that's how we'll start.

Create an empty bucket, replacing YOUR_NAME with your name 😁:

$ export REGION=us-east-1
$ export STATE_BUCKET=YOUR_NAME-state-store
# Create the bucket using awscli
$ aws s3api create-bucket \
    --bucket ${STATE_BUCKET} \
    --region ${REGION}

If the name is taken and you receive an error, change the name and try again.

Next, rather than typing the same command arguments every time, export the KOPS_STATE_STORE and NAME variables. Set KOPS_STATE_STORE to the bucket you just created and NAME to a cluster name that ends with .k8s.local, for example:

$ export NAME="mycoolcluster.k8s.local" # SHOULD END WITH .k8s.local
$ export KOPS_STATE_STORE="s3://${STATE_BUCKET}"

After that, generate a dummy SSH key for kops to use:

$ ssh-keygen -b 2048 -t rsa -f ${HOME}/.ssh/id_rsa -q -N ""

To install helm, run the following:

$ wget https://get.helm.sh/helm-v3.11.2-linux-amd64.tar.gz
$ tar -zxvf helm-v3.11.2-linux-amd64.tar.gz
$ sudo mv linux-amd64/helm /usr/local/bin/helm

Finally, install additional software and make sure the aws cli is updated to the latest version:

$ curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip" && \
    unzip awscliv2.zip && \
    sudo ./aws/install --update
$ sudo yum install git jq go -y

Create Cluster

Now you are ready to create the cluster. We're going to create a production ready high availability cluster with 3 masters and 3 nodes:

$ kops create cluster \
    --name ${NAME} \
    --state ${KOPS_STATE_STORE} \
    --node-count 3 \
    --master-count=3 \
    --zones us-east-1a \
    --master-zones us-east-1a,us-east-1b,us-east-1c \
    --node-size t2.large \
    --master-size t2.medium \
    --master-volume-size=20 \
    --node-volume-size=20 \
    --networking flannel

When cluster configuration is ready, edit it:

$ kops edit cluster ${NAME}

In the editor find the iam section at the end of the spec that looks like this:

...
spec:
  ...
  iam:
    allowContainerRegistry: true
    legacy: false
  ...

Edit it so it looks like the next snippet:

...
spec:
  ...
  iam:
    allowContainerRegistry: true
    legacy: false
    permissionsBoundary: arn:aws:iam::ACCOUNT_ID_HERE:policy/CustomPowerUserBound
  ...

After saving the document, run the following command:

$ kops update cluster ${NAME} --yes --admin

You can either wait 5-10 minutes until the cluster is ready or proceed to the next steps.

Setup Amazon ECR

Export the required variables.

$ export REGION="us-east-1"
$ export REPO_PREFIX="your-name"

After that you can run the following command to create a repository for the demo project.

$ aws ecr create-repository \
    --repository-name "${REPO_PREFIX}-coolapp" \
    --image-scanning-configuration scanOnPush=true \
    --region "${REGION}"

Go to the AWS Developer Tools console.

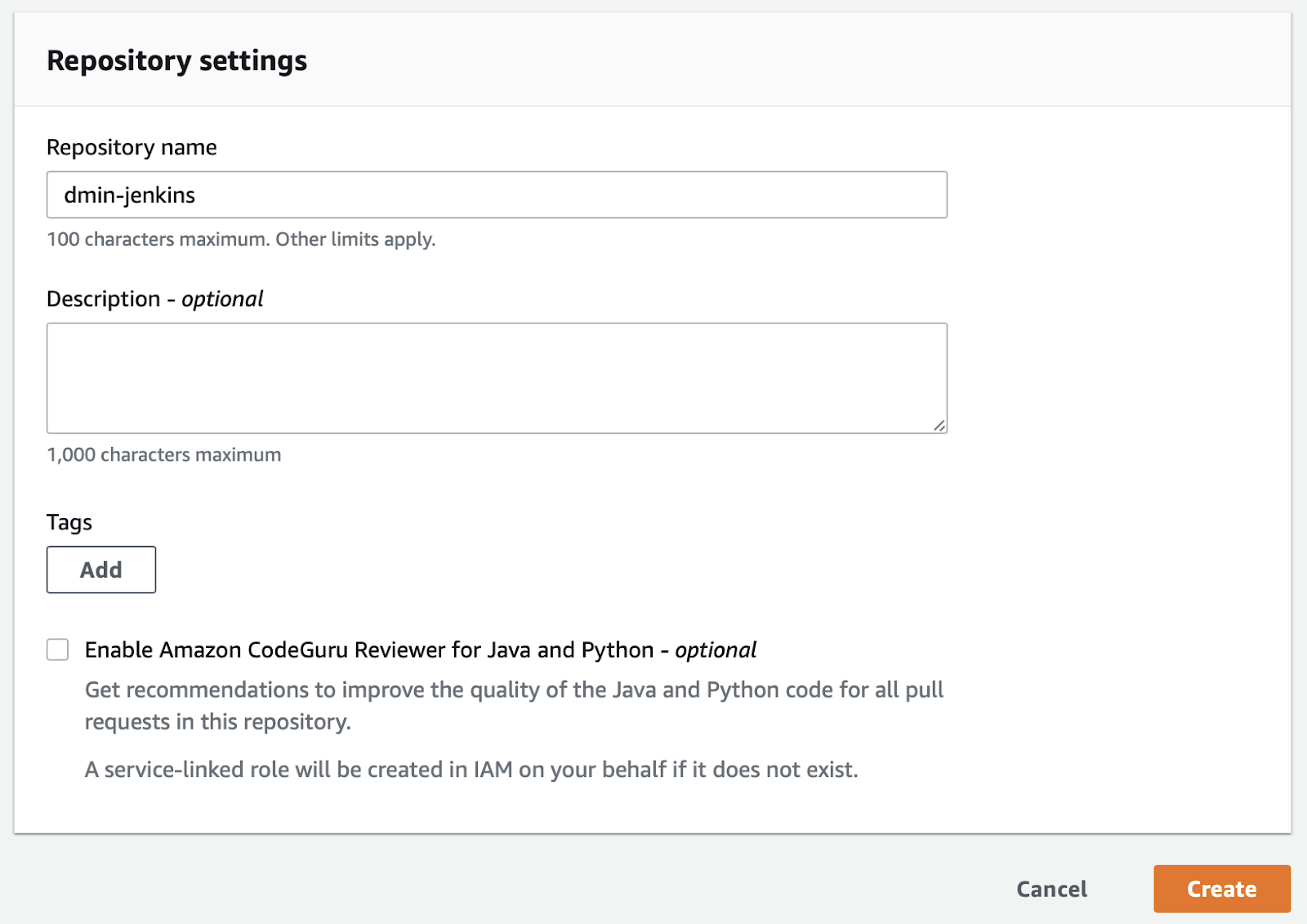

From the Developer Tools left menu, in the Source section, choose Get started and then click the Create Repository button.

Provide a Repository Name of YOUR_NAME-jenkins (replacing YOUR_NAME with your name 😀 ) and click Create.

In the Cloud Shell, enter the following to create a folder called devops. Then change to the folder you just created.

$ mkdir devops
$ cd devops

Now clone the empty repository you just created.

$ export YOUR_NAME=your-name-here
$ sudo yum install python-pip -y
$ pip install --user git-remote-codecommit
$ git clone codecommit::us-east-1://${YOUR_NAME}-jenkins

The previous command created an empty folder called YOUR_NAME-jenkins. Change to that folder.

$ cd ${YOUR_NAME}-jenkins

Create the application

You need some source code to manage. So, you will create a simple Go web application.

In Kops instance Shell, type the following to create a Golang module.

$ go mod init example.devops

Next, type the following to create a Golang starting point.

$ vi main.go

In the Vi Editor paste the following:

package main

import (
	"fmt"
	"log"
	"net/http"
	"os"
)

type Server struct{}

func (s *Server) ServeHTTP(w http.ResponseWriter, r *http.Request) {
	environment := "production"
	if en := os.Getenv("DEVOPS_ENV"); en != "" {
		environment = en
	}
	// Set headers before calling WriteHeader; headers added afterwards are not sent.
	w.Header().Set("Content-Type", "application/json")
	w.WriteHeader(http.StatusOK)
	w.Write([]byte(fmt.Sprintf(`{"message": "hello from %s"}`, environment)))
}

func main() {
	s := &Server{}
	http.Handle("/", s)
	log.Fatal(http.ListenAndServe(":8080", nil))
}

Add a new file called .gitignore.

$ vi .gitignore

In the Vi Editor add the following code and save the file.

# Binaries for programs and plugins
*.exe
*.exe~
*.dll
*.so
*.dylib

# Test binary, built with `go test -c`
*.test

# Output of the go coverage tool, specifically when used with LiteIDE
*.out

# Dependency directories (remove the comment below to include it)
vendor/

.DS_Store

You have some files now, let's save them to the repository. First, you need to add all the files you created to your local Git repo. Run the following:

$ cd ~/devops/${YOUR_NAME}-jenkins
$ git add --all

Now, let's commit the changes locally. Git needs an author identity for the commit, so set it first:

$ git config user.email "you@example.com"
$ git config user.name "Your Name"
$ git commit -a -m "Initial Commit"

You committed the changes locally, but have not updated the Git repository you created in Amazon Cloud. Enter the following command to push your changes to the cloud.

$ git push origin master

Go to the CodeCommit Repositories web page. Find your repository and navigate to its page. You should see the files you just created.

Test the application locally (Optional)

Back in Kops instance, make sure you are in your application's root folder. To run the program, type:

$ cd ~/devops/${YOUR_NAME}-jenkins
$ go fmt
$ go run main.go

To see the program running, open a new Cloud Shell tab and run:

$ curl http://localhost:8080
{"message": "hello from production"}

To stop the program, switch back to the first tab and press Ctrl+C. You can close the second tab for now.

Creating the Dockerfile for your application

The first step to using Docker is to create a file called Dockerfile.

$ vi Dockerfile

Enter the following and save it.

FROM scratch
WORKDIR /app
COPY ./app .
ENTRYPOINT [ "./app" ]

Next, add a .dockerignore file, so that our git repository won't end up in the container:

$ vi .dockerignore

And paste the following:

**/.git

Enter the following to make sure you are in the right folder and add your new Dockerfile to Git.

$ cd ~/devops/${YOUR_NAME}-jenkins
$ git add --all
$ git commit -a -m "Added Docker Support"

Push your changes to the master repository using the following command.

$ git push origin master

Go back to CodeCommit in the AWS Management Console (it will be in another browser tab), refresh the repository, and verify your changes were uploaded.

Create a unit test

First, create a simple test to test the application. Create the file main_test.go:

$ cd ~/devops/${YOUR_NAME}-jenkins/
$ vi main_test.go

And paste the following content using Vi Editor:

package main

import (
	"io/ioutil"
	"net/http"
	"net/http/httptest"
	"testing"
)

func TestMyHandler(t *testing.T) {
	handler := &Server{}
	server := httptest.NewServer(handler)
	defer server.Close()

	resp, err := http.Get(server.URL)
	if err != nil {
		t.Fatal(err)
	}
	// Close the response body once the test is done with it.
	defer resp.Body.Close()

	if resp.StatusCode != 200 {
		t.Fatalf("Received non-200 response: %d\n", resp.StatusCode)
	}

	expected := `{"message": "hello from production"}`
	actual, err := ioutil.ReadAll(resp.Body)
	if err != nil {
		t.Fatal(err)
	}
	if expected != string(actual) {
		t.Errorf("Expected the message '%s', got '%s'\n", expected, string(actual))
	}
}

Verify the test is working by running the following:

$ go test ./ -v -short

Finally, push the application code to CodeCommit Repositories.

$ cd ~/devops/${YOUR_NAME}-jenkins
$ git add --all
$ git commit -a -m "Added Unit Test"
$ git push origin master

Now we are ready to deploy Jenkins.

Create IAM User

To access CodeCommit from Jenkins we need to have an IAM User with required permissions. Run the following to create the user:

$ export ACCOUNT_ID=`aws sts get-caller-identity --query "Account" --output text`
$ aws iam create-user --user-name Training-${YOUR_NAME}-jenkins \
    --permissions-boundary arn:aws:iam::${ACCOUNT_ID}:policy/CustomPowerUserBound

Next, attach the AWSCodeCommitPowerUser policy to it:

$ export POLICY_ARN=$(aws iam list-policies --query 'Policies[?PolicyName==`AWSCodeCommitPowerUser`].{ARN:Arn}' --output text)
$ aws iam attach-user-policy \
    --user-name Training-${YOUR_NAME}-jenkins \
    --policy-arn $POLICY_ARN

Next, upload your Cloud Shell public SSH key as this user's SSH key. We're going to use it to access CodeCommit.

$ aws iam upload-ssh-public-key \
    --user-name Training-${YOUR_NAME}-jenkins \
    --ssh-public-key-body file://${HOME}/.ssh/id_rsa.pub

Output:

{
    "SSHPublicKey": {
        "UserName": "Training-YOUR_NAME-jenkins",
        "SSHPublicKeyId": "AXXXXXXX",
        "Fingerprint": "xxx",
        "SSHPublicKeyBody": "ssh-rsa the_key",
        "Status": "Active",
        "UploadDate": "xxxx"
    }
}

Create a Kubernetes Secret from the private SSH key file to be able to access it in Jenkins.

$ kubectl create secret generic jenkins-ssh \
    --from-file=${HOME}/.ssh/id_rsa

Create values.yaml

Change to the devops directory and create a values.yaml file with basic Jenkins Chart overrides:

$ cd ~/devops/
$ vi values.yaml

And paste the following:

controller:
  sidecars:
    configAutoReload:
      enabled: true
  installPlugins:
    - kubernetes:latest
    - workflow-aggregator:latest
    - git:latest
    - configuration-as-code:latest
  additionalPlugins:
    - ssh-credentials:latest
    - pipeline-stage-view:latest
  resources:
    requests:
      cpu: "50m"
      memory: "1024Mi"
    limits:
      cpu: "1000m"
      memory: "2048Mi"
  javaOpts: "-Xms2048m -Xmx2048m"
  serviceType: LoadBalancer
  JCasC:
    configScripts:
      setup-ssh-keys: |
        credentials:
          system:
            domainCredentials:
            - credentials:
              - basicSSHUserPrivateKey:
                  scope: GLOBAL
                  id: codecommit
                  username: git
                  description: "SSH key for CodeCommit"
                  privateKeySource:
                    directEntry:
                      privateKey: "${readFile:/run/secrets/id_rsa}"
        security:
          gitHostKeyVerificationConfiguration:
            sshHostKeyVerificationStrategy: "noHostKeyVerificationStrategy"
persistence:
  enabled: true
  volumes:
    - name: jenkins-ssh
      secret:
        secretName: jenkins-ssh
  mounts:
    - name: jenkins-ssh
      mountPath: /run/secrets/id_rsa
      subPath: id_rsa
      readOnly: true
agent:
  resources:
    requests:
      cpu: "500m"
      memory: "256Mi"
    limits:
      cpu: "1000m"
      memory: "512Mi"
serviceAccountAgent:
  create: true

Here we configure resources for Jenkins Controller and Agent as well as mount our Kubernetes Secret so we can create a Jenkins Credential that can be used inside the pipeline. We do that using Jenkins Configuration as Code.

Now we can install Jenkins by adding the Stable Helm Chart Repository and executing install:

$ helm repo add jenkinsci https://charts.jenkins.io
$ helm repo update
$ helm install my-release jenkinsci/jenkins --values values.yaml

For agents to be able to deploy applications to our cluster, RBAC roles must be set up. To create the role and bind it to the agent service account, copy the following into the console (assuming your Jenkins chart release name is my-release). The role is scoped to the entire cluster because we want to deploy our application into different namespaces for testing:

$ cat <<EOF | kubectl apply -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: jenkins-deploy
rules:
- apiGroups:
  - extensions
  - apps
  - v1
  - ""
  resources:
  - containers
  - endpoints
  - services
  - pods
  - replicasets
  - secrets
  - namespaces
  - deployments
  verbs:
  - create
  - get
  - list
  - patch
  - update
  - watch
  - delete
- apiGroups:
  - v1
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: jenkins-edit
  namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: jenkins-deploy
subjects:
- kind: ServiceAccount
  name: my-release-jenkins-agent
  namespace: default
EOF

Now you are ready to connect to Jenkins. Enter the following to get the Admin password:

$ printf $(kubectl get secret --namespace default my-release-jenkins -o jsonpath="{.data.jenkins-admin-password}" | base64 --decode);echo

And login URL:

$ export SERVICE_IP=$(kubectl get svc --namespace default my-release-jenkins --template "{{ range (index .status.loadBalancer.ingress 0) }}{{ . }}{{ end }}")
$ echo http://$SERVICE_IP:8080/login

Enter the URL into the browser.

You should see the Jenkins Login screen.

Enter username admin and the password you got earlier.

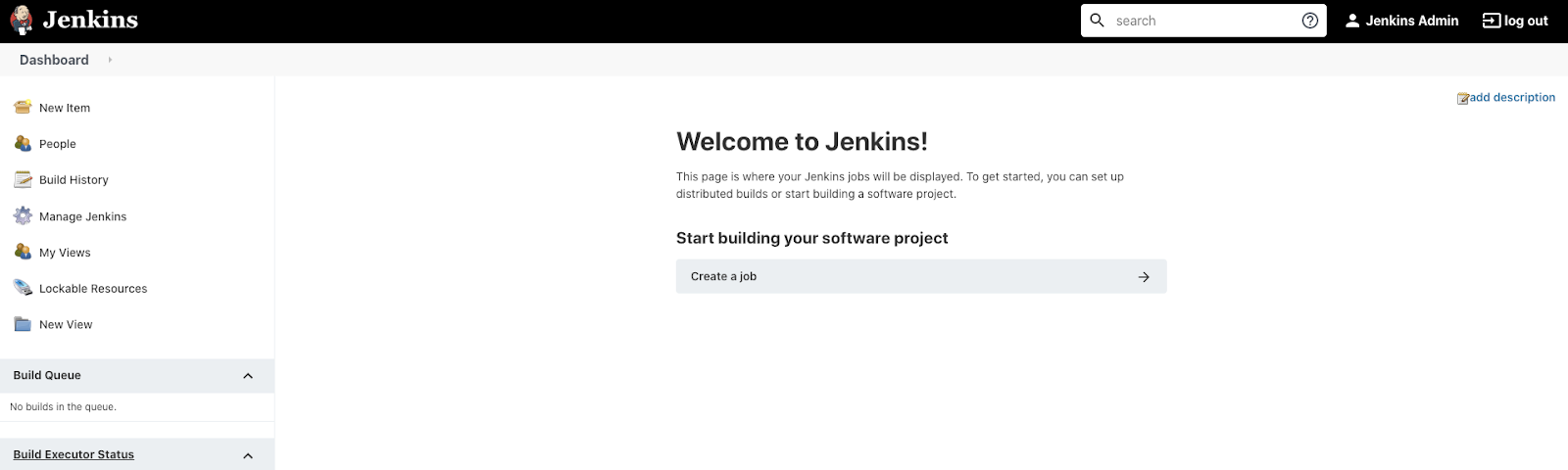

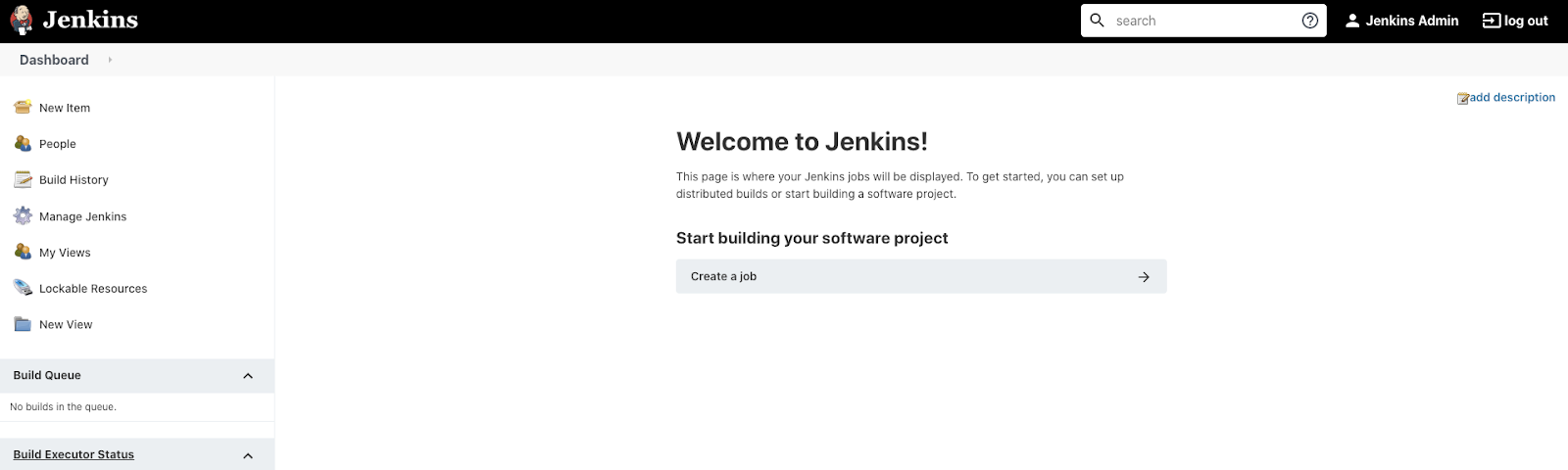

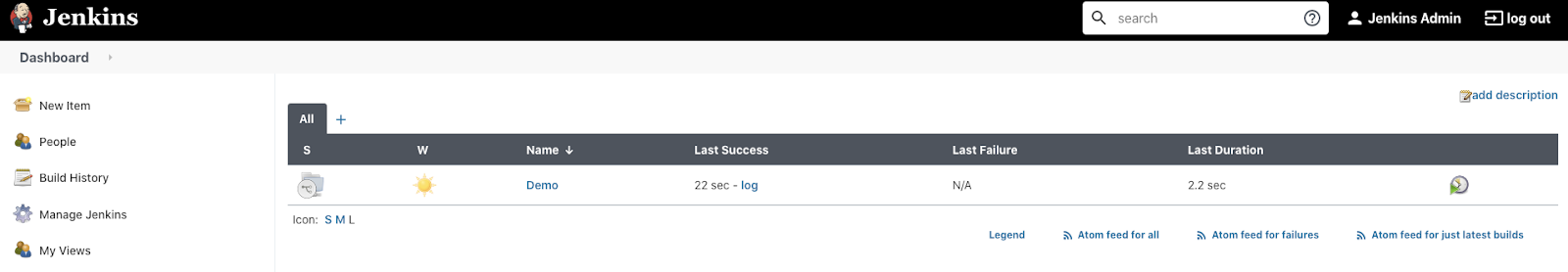

If login is successful, you will see the Jenkins main page:

Verify the SSH Key

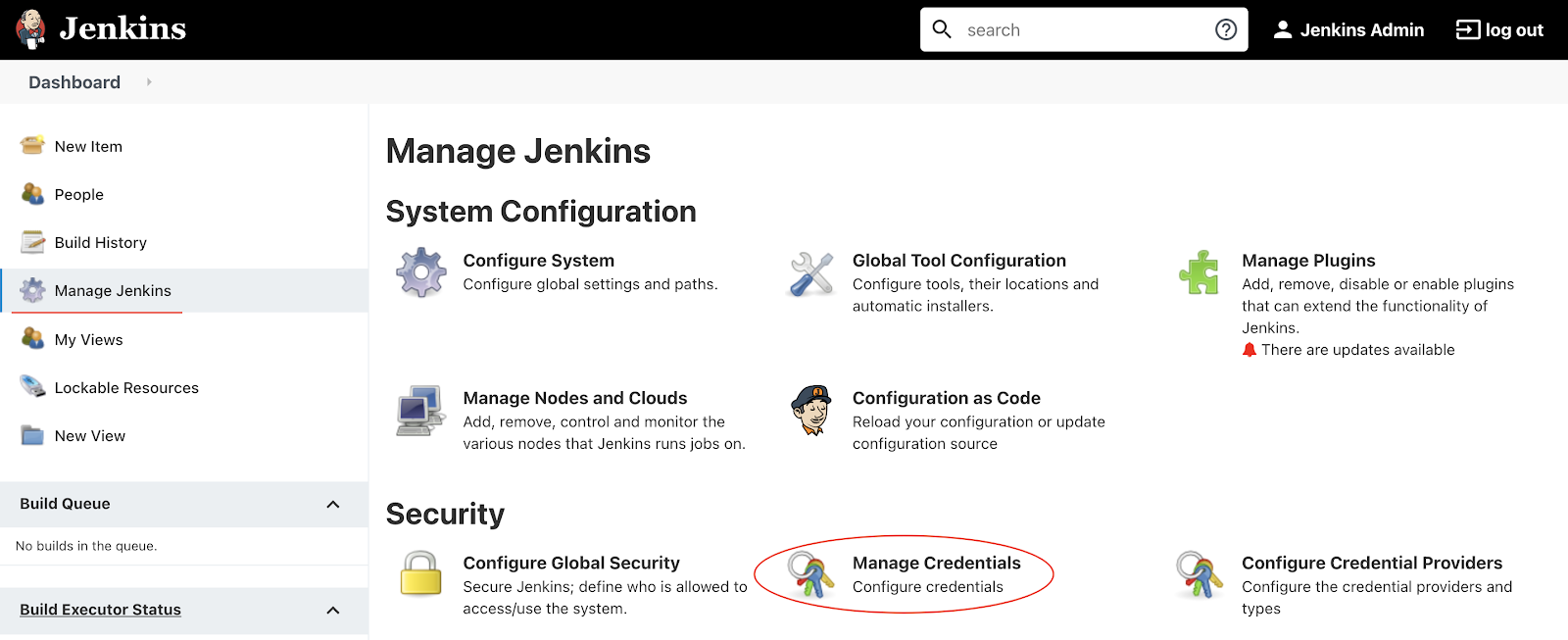

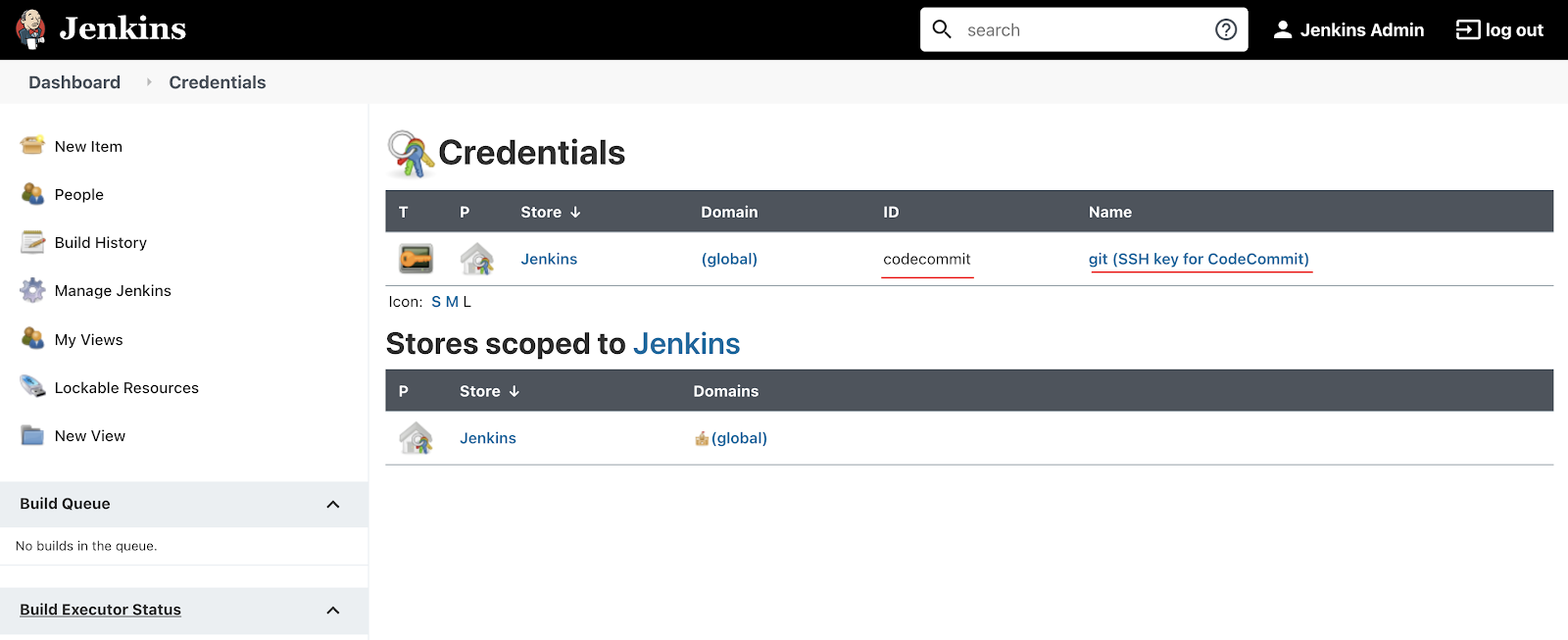

Back on the Jenkins page, on the left side pane, click Manage Jenkins, then Manage Credentials.

You should see that the credentials are stored successfully.

Back on the main Jenkins page, click the "Create a job" button:

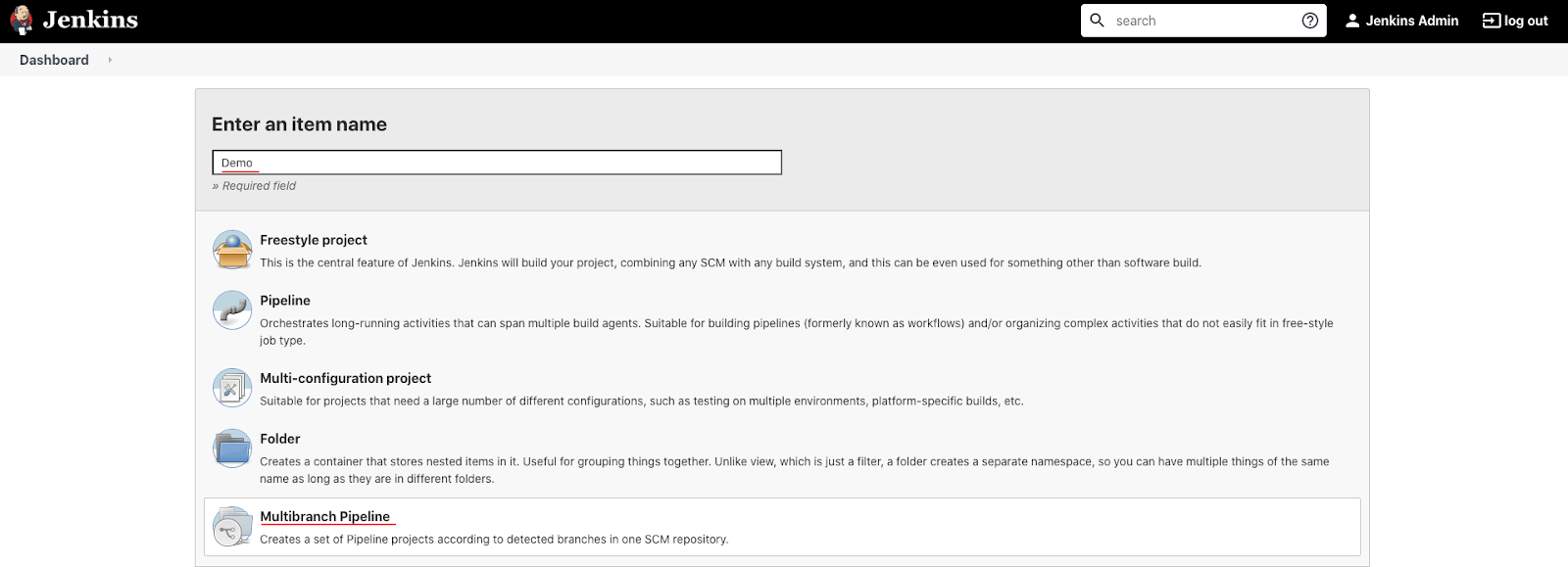

Then enter the project name and select Multibranch Pipeline project type, then click OK.

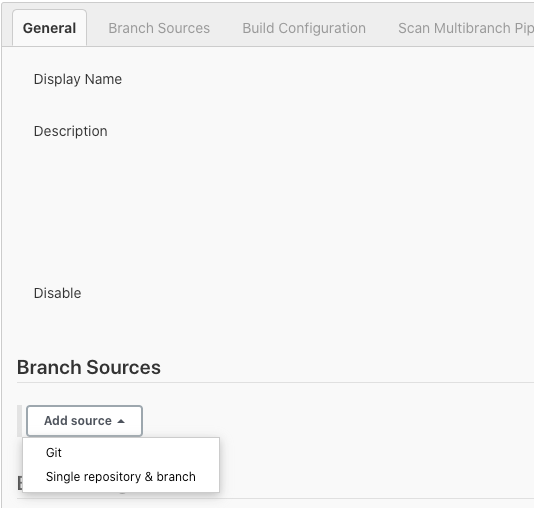

Click Add source and choose git.

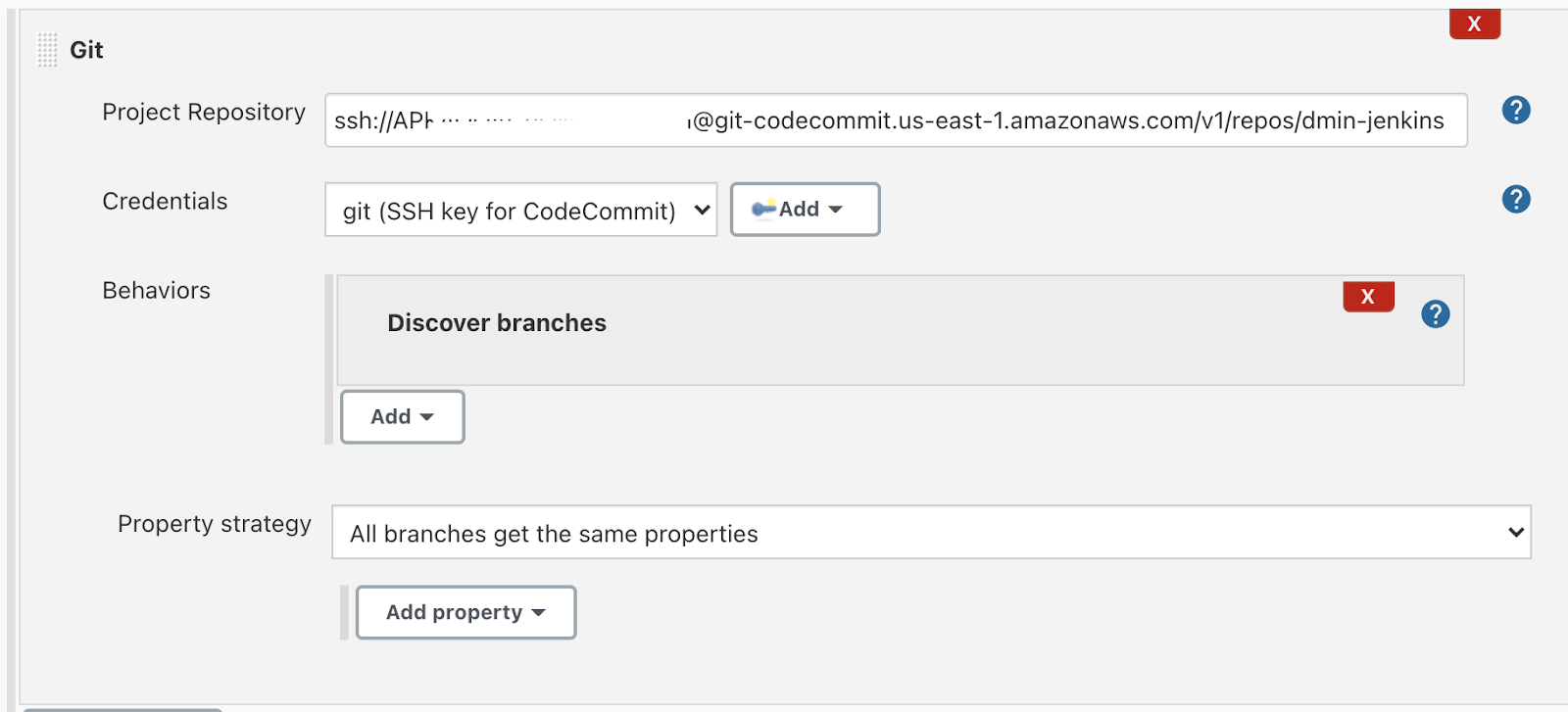

Paste the SSH clone URL of your YOUR_NAME-jenkins repo on CodeCommit Repositories into the Project Repository field. It will look like:

ssh://SSHPublicKeyId@git-codecommit.us-east-1.amazonaws.com/v1/repos/YOUR_NAME-jenkins

For example (do not copy):

ssh://APXXXXXXXXXXI@git-codecommit.us-east-1.amazonaws.com/v1/repos/dmin-jenkins

From the Credentials dropdown, select the name of the credential that was created with SSH secret. It should have the format git(SSH key for CodeCommit).

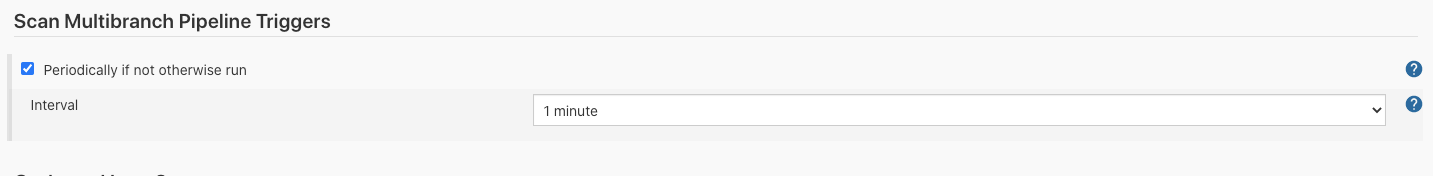

Under the Scan Multibranch Pipeline Triggers section, check the Periodically if not otherwise run box, then set the Interval value to 1 minute.

Click Save, leaving all other options with default values.

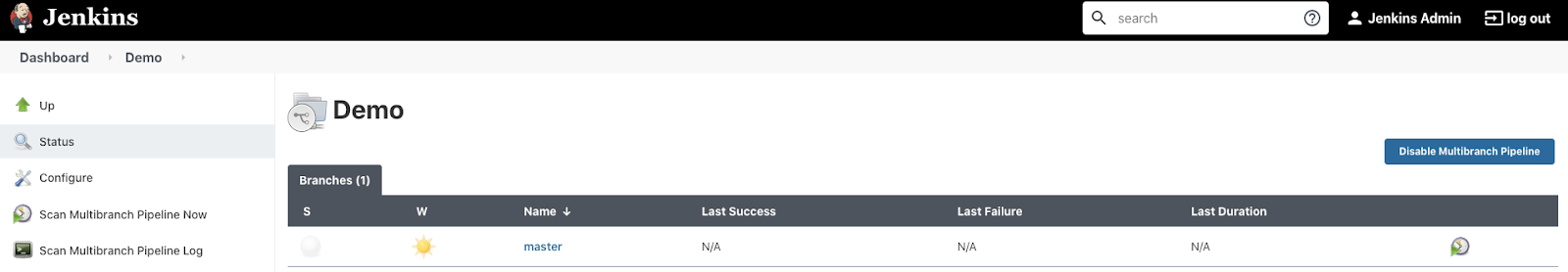

A Branch indexing job was kicked off to identify any branches in your repository.

Click Dashboard > Demo, in the top menu.

You should see that no branches containing buildable projects were found. This means we need to add a Jenkinsfile to our project.

Create Jenkinsfile

Let's run unit tests every time we push new commits, and if the tests are OK - create and push a new Docker image to ECR.

In kops instance terminal shell, change to the project directory and create a Jenkinsfile with basic configuration:

$ cd ~/devops/${YOUR_NAME}-jenkins
$ vi Jenkinsfile

Paste the following:

pipeline {
    agent none
    environment {
        ACCOUNT_ID = "AWS_ACCOUNT_ID"
        REPO_PREFIX = "AWS_REPO_PREFIX"
        BUILD_CONTEXT_BUCKET = "${REPO_PREFIX}-artifacts-jenkins"
        BUILD_CONTEXT = "build-context-${BRANCH_NAME}.${BUILD_NUMBER}.tar.gz"
        ECR_IMAGE = "${ACCOUNT_ID}.dkr.ecr.us-east-1.amazonaws.com/${REPO_PREFIX}-coolapp:${BRANCH_NAME}.${BUILD_NUMBER}"
    }
    stages {
        stage('Test and Build the App') {
            agent {
                kubernetes {
                    yaml """
apiVersion: v1
kind: Pod
metadata:
  name: coolappbuilder
  labels:
    robot: builder
spec:
  serviceAccount: my-release-jenkins-agent
  containers:
  - name: jnlp
  - name: golang
    image: golang:1.15
    command:
    - cat
    tty: true
  - name: aws
    image: amazon/aws-cli
    command:
    - /bin/bash
    args:
    - -c
    - \"trap : TERM INT; sleep infinity & wait\"
"""
                }
            }
            steps {
                container(name: 'golang', shell: '/bin/bash') {
                    // run tests
                    sh 'go test ./ -v -short'
                    // generate artifact
                    sh 'CGO_ENABLED=0 GOOS=linux GOARCH=amd64 go build -a -ldflags \'-w -s -extldflags "-static"\' -o app .'
                    // archive the build context for kaniko.
                    // It's unnecessary to archive everything, but for the lab it's ok :)
                    sh "tar --exclude='./.git' -zcvf /tmp/$BUILD_CONTEXT ."
                    sh "mv /tmp/$BUILD_CONTEXT ."
                }
                container(name: 'aws', shell: '/bin/bash') {
                    sh "aws s3 cp ${BUILD_CONTEXT} s3://${BUILD_CONTEXT_BUCKET}"
                }
            }
        }
        stage('Build image with Kaniko') {
            agent {
                kubernetes {
                    yaml """
apiVersion: v1
kind: Pod
metadata:
  name: coolapppusher
  labels:
    robot: pusher
spec:
  serviceAccount: my-release-jenkins-agent
  containers:
  - name: jnlp
  - name: kaniko
    image: gcr.io/kaniko-project/executor:v1.8.1-debug
    imagePullPolicy: Always
    command:
    - /busybox/cat
    tty: true
    volumeMounts:
    - name: docker-config
      mountPath: /kaniko/.docker/
    env:
    - name: AWS_REGION
      value: us-east-1
  volumes:
  - name: docker-config
    configMap:
      name: docker-config
"""
                }
            }
            environment {
                PATH = "/busybox:/kaniko:$PATH"
            }
            steps {
                container(name: 'kaniko', shell: '/busybox/sh') {
                    sh '''#!/busybox/sh
                    /kaniko/executor -f `pwd`/Dockerfile -c `pwd` --context="s3://${BUILD_CONTEXT_BUCKET}/${BUILD_CONTEXT}" --verbosity debug --destination ${ECR_IMAGE}
                    '''
                }
            }
        }
    }
}

Substitute the account id and repo prefix variables:

$ export ACCOUNT_ID=`aws sts get-caller-identity --query "Account" --output text`
$ sed -i -e "s/AWS_ACCOUNT_ID/${ACCOUNT_ID}/g" \
    -e "s/AWS_REPO_PREFIX/${REPO_PREFIX}/g" Jenkinsfile

Next, create a bucket that is going to be used to store artifacts:

$ aws s3api create-bucket \
    --bucket ${REPO_PREFIX}-artifacts-jenkins \
    --region ${REGION}

Allow the Kops cluster to use S3 and ECR. First, edit the cluster:

$ kops edit cluster ${NAME}

Find the spec that looks like this:

...
spec:
  api:
    loadBalancer:
      class: Classic
...

And modify it to insert the policy as follows:

...
spec:
  additionalPolicies:
    node: |
      [
        {
          "Effect": "Allow",
          "Action": ["s3:*"],
          "Resource": ["*"]
        },
        {
          "Effect": "Allow",
          "Action": [
            "ecr:GetAuthorizationToken",
            "ecr:BatchCheckLayerAvailability",
            "ecr:GetDownloadUrlForLayer",
            "ecr:GetRepositoryPolicy",
            "ecr:DescribeRepositories",
            "ecr:ListImages",
            "ecr:DescribeImages",
            "ecr:BatchGetImage",
            "ecr:GetLifecyclePolicy",
            "ecr:GetLifecyclePolicyPreview",
            "ecr:ListTagsForResource",
            "ecr:DescribeImageScanFindings",
            "ecr:InitiateLayerUpload",
            "ecr:UploadLayerPart",
            "ecr:CompleteLayerUpload",
            "ecr:PutImage"
          ],
          "Resource": "*"
        }
      ]
  api:
    loadBalancer:
      class: Classic
...

Next, disable instance metadata protection so the pods can use the instance's role directly. First, edit the node instance group:

$ kops edit ig nodes-us-east-1a

Find the spec that looks like this:

...
spec:
  image: 099720109477/ubuntu/...
  instanceMetadata:
    httpPutResponseHopLimit: 1
    httpTokens: required
  machineType: t2.large
...

And remove the instanceMetadata section as follows:

...
spec:
  image: 099720109477/ubuntu/...
  machineType: t2.large
...

Update the cluster:

$ kops update cluster ${NAME} --yes
$ kops rolling-update cluster --yes

When the update is done, create a Kubernetes ConfigMap holding the settings that let our Kaniko builder access ECR using the IAM policy provided by the updated instance role:

$ cat << EOF | kubectl create -f -
apiVersion: v1
kind: ConfigMap
metadata:
  name: docker-config
data:
  config.json: |-
    { "credsStore": "ecr-login" }
EOF

Finally, push the application code to CodeCommit Repositories.

$ cd ~/devops/${YOUR_NAME}-jenkins

$ git add --all

$ git commit -a -m "Added Jenkinsfile"

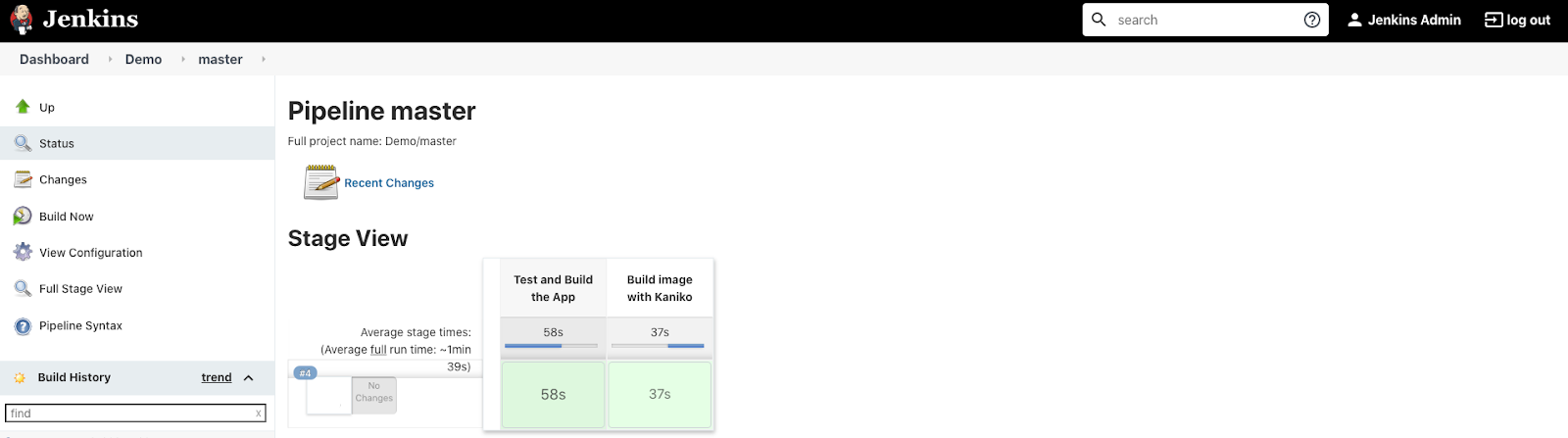

$ git push origin masterGo to the Jenkins Web Interface and click the Demo project.

You should see a build executing for the master branch:

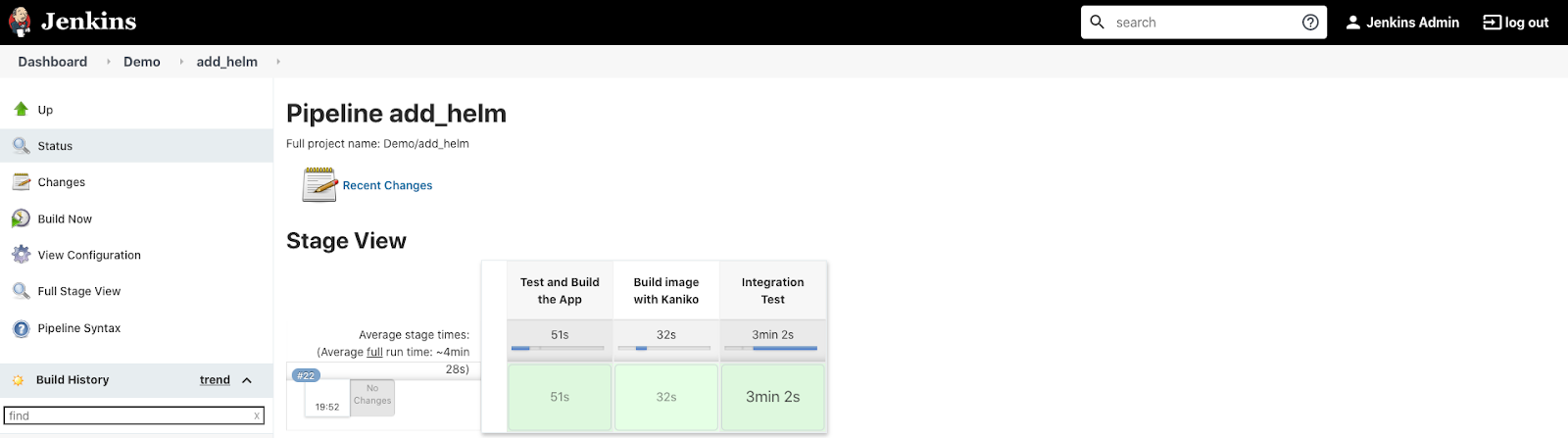

Click the master branch to see the execution details. You should see the stages executing one by one:

When the pipeline completes, in AWS Console, go to the Elastic Container Registry service.

Click on your repository. You should see your image in the list.

Next, go to S3.

Find your artifact store bucket and verify that your versioned artifact is present.
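When checking ECR and S3 it helps to know which names to look for. The sketch below reproduces, in Go, the string composition done in the Jenkinsfile environment block (BUILD_CONTEXT and ECR_IMAGE). The function names and the sample account ID, prefix, and build number are placeholders chosen for this illustration, not values from the lab.

```go
package main

import "fmt"

// buildContext mirrors BUILD_CONTEXT from the Jenkinsfile:
// build-context-<branch>.<build number>.tar.gz
func buildContext(branch string, build int) string {
	return fmt.Sprintf("build-context-%s.%d.tar.gz", branch, build)
}

// ecrImage mirrors ECR_IMAGE from the Jenkinsfile:
// <account>.dkr.ecr.us-east-1.amazonaws.com/<prefix>-coolapp:<branch>.<build number>
func ecrImage(accountID, prefix, branch string, build int) string {
	return fmt.Sprintf("%s.dkr.ecr.us-east-1.amazonaws.com/%s-coolapp:%s.%d",
		accountID, prefix, branch, build)
}

func main() {
	// Placeholder values: a dummy account ID, the example prefix, first build of master.
	fmt.Println(buildContext("master", 1))
	fmt.Println(ecrImage("123456789012", "your-name", "master", 1))
}
```

For the first build of the master branch, the object in the artifact bucket would therefore be named build-context-master.1.tar.gz and the image tag would be master.1.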

Jenkinsfile review

Before we move on to the next step let's review the Jenkinsfile configuration.

The pipeline keyword means that this is a declarative pipeline:

pipeline {
    ...
}

Next, agent none disables the top-level agent configuration for the job, because each stage defines its own agent config.

Next, the environment section configures the environment variables for the entire pipeline.

environment {
    ...
}

Next, the stages section describes our pipeline. Each stage can have a name so developers can understand what parts of the pipeline are currently executing.

A stage consists of steps, which are the commands to execute.

The first stage, Test and Build the App, has its agent configuration specified as a Pod manifest for the Kubernetes plugin (the Pod Template used for executing the build). We specify the service account to use with this agent Pod.

We have 3 containers needed for the job:

- Default Jenkins agent - jnlp

- golang - to compile the application

- aws - AWS CLI for S3 Access

stage('Test and Build the App') {
    agent {
        kubernetes {
            yaml """
apiVersion: v1
kind: Pod
metadata:
  name: coolappbuilder
  labels:
    robot: builder
spec:
  serviceAccount: my-release-jenkins-agent
  containers:
  - name: jnlp
  - name: golang
    image: golang:1.15
    command:
    - cat
    tty: true
  - name: aws
    image: amazon/aws-cli
    command:
    - /bin/bash
    args:
    - -c
    - \"trap : TERM INT; sleep infinity & wait\"
"""
        }
    }
    steps {
        ...
    }
}

The first stage's steps test our application, compile it, then archive it and upload it to S3 Storage for later use. We specify which container in the pod should execute each step, because the pod defines several. To upload to S3 Storage we use the AWS CLI.

steps {
    container(name: 'golang', shell: '/bin/bash') {
        // run tests
        sh 'go test ./ -v -short'
        // generate artifact
        sh 'CGO_ENABLED=0 GOOS=linux GOARCH=amd64 go build -a -ldflags \'-w -s -extldflags "-static"\' -o app .'
        // archive the build context for kaniko.
        // It's unnecessary to archive everything, but for the lab it's ok :)
        sh "tar --exclude='./.git' -zcvf /tmp/$BUILD_CONTEXT ."
        sh "mv /tmp/$BUILD_CONTEXT ."
    }
    container(name: 'aws', shell: '/bin/bash') {
        sh "aws s3 cp ${BUILD_CONTEXT} s3://${BUILD_CONTEXT_BUCKET}"
    }
}

The second stage, Build image with Kaniko, also has its agent configuration specified as a Pod manifest. We specify the service account to use with this agent Pod as well.

We have 2 containers needed for the job:

- Default Jenkins agent - jnlp

- kaniko - to build the image (will be discussed later)

Here, we additionally mount our ConfigMap to the Kaniko container so it knows how to access the ECR.

stage('Build image with Kaniko') {
    agent {
        kubernetes {
            yaml """
apiVersion: v1
kind: Pod
metadata:
  name: coolapppusher
  labels:
    robot: pusher
spec:
  serviceAccount: my-release-jenkins-agent
  containers:
  - name: jnlp
  - name: kaniko
    image: gcr.io/kaniko-project/executor:v1.8.1-debug
    imagePullPolicy: Always
    command:
    - /busybox/cat
    tty: true
    volumeMounts:
    - name: docker-config
      mountPath: /kaniko/.docker/
    env:
    - name: AWS_REGION
      value: us-east-1
  volumes:
  - name: docker-config
    configMap:
      name: docker-config
"""
        }
    }
    steps {
        ...
    }
}

Once we have a compiled app, we can build the Docker image. Building it in our cluster with Docker, however, would require giving our agents root access to the underlying Docker daemon and therefore to the system. To avoid this, we can use a build tool that does not require elevated privileges and can build images in userspace without a Docker daemon. Kaniko is one of the popular executors of this kind. Its image is minimal, with a simplified file system, so the configuration looks a bit odd. To run the Kaniko executor we need to pass at least the Docker context (the Dockerfile and any files to copy) and the destination, which is the Docker repository where Kaniko will push the image.

...
environment {
    PATH = "/busybox:/kaniko:$PATH"
}
steps {
    container(name: 'kaniko', shell: '/busybox/sh') {
        sh '''#!/busybox/sh
        /kaniko/executor -f `pwd`/Dockerfile -c `pwd` --context="s3://${BUILD_CONTEXT_BUCKET}/${BUILD_CONTEXT}" --verbosity debug --destination ${ECR_IMAGE}
        '''
    }
}
...

The Dockerfile used in the project is quite simple too. Because our application is a statically linked binary, we don't need an OS or extra libraries in the container and can use the scratch base image.

FROM scratch
WORKDIR /app
COPY ./app .
ENTRYPOINT [ "./app" ]

We know that the application should be working because the unit tests passed, but can it be deployed to production? Let's create a Helm chart to deploy it and test that the chart works.

Create a Helm Chart

Create a new branch and create a new chart named coolapp in our demo directory:

$ cd ~/devops/${YOUR_NAME}-jenkins
$ git checkout -b add_helm
$ helm create coolapp

Edit Chart.yaml:

$ vi coolapp/Chart.yaml

And change it so that it looks like this:

apiVersion: v2
name: coolapp
description: Example application deployment
type: application
version: 0.1.0
appVersion: "1.0"

Edit values.yaml. We have only a handful of variables with default values, as the others will only be available during the Jenkins build:

$ vi coolapp/values.yaml

And change it so that it looks like this:

replicaCount: 1
service:
  type: ClusterIP
  port: 80

Remove the unused *.yaml and NOTES.txt.

$ rm ./coolapp/templates/*.yaml
$ rm ./coolapp/templates/NOTES.txt

Create new Deployment template:

$ cat <<EOF >./coolapp/templates/deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ include "coolapp.fullname" . }}
  namespace: {{ .Release.Namespace }}
  labels:
    {{- include "coolapp.labels" . | nindent 4 }}
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      {{- include "coolapp.selectorLabels" . | nindent 6 }}
  template:
    metadata:
      labels:
        {{- include "coolapp.selectorLabels" . | nindent 8 }}
    spec:
      containers:
        - name: {{ .Chart.Name }}
          image: "{{ .Values.accountId }}.dkr.ecr.us-east-1.amazonaws.com/{{ .Values.prefix }}-coolapp:{{ .Values.tag }}"
          ports:
            - name: http
              containerPort: 8080
              protocol: TCP
          livenessProbe:
            httpGet:
              path: /
              port: http
          readinessProbe:
            httpGet:
              path: /
              port: http
EOF

Create new Service template:

$ cat <<EOF >./coolapp/templates/service.yaml
apiVersion: v1
kind: Service
metadata:
  name: {{ include "coolapp.fullname" . }}
  namespace: {{ .Release.Namespace }}
  labels:
    {{- include "coolapp.labels" . | nindent 4 }}
spec:
  type: {{ .Values.service.type }}
  ports:
    - port: {{ .Values.service.port }}
      targetPort: http
      protocol: TCP
      name: http
  selector:
    {{- include "coolapp.selectorLabels" . | nindent 4 }}
EOF

Create new Notes template:

$ cat <<EOF >./coolapp/templates/NOTES.txt
Get the application URL by running these commands:
{{- if contains "NodePort" .Values.service.type }}
  export NODE_PORT=\$(kubectl get --namespace {{ .Release.Namespace }} -o jsonpath="{.spec.ports[0].nodePort}" services {{ include "coolapp.fullname" . }})
  export NODE_IP=\$(kubectl get nodes --namespace {{ .Release.Namespace }} -o jsonpath="{.items[0].status.addresses[0].address}")
  echo http://\$NODE_IP:\$NODE_PORT
{{- else if contains "LoadBalancer" .Values.service.type }}
  NOTE: It may take a few minutes for the LoadBalancer IP to be available.
  You can watch the status by running 'kubectl get --namespace {{ .Release.Namespace }} svc -w {{ include "coolapp.fullname" . }}'
  export SERVICE_IP=\$(kubectl get svc --namespace {{ .Release.Namespace }} {{ include "coolapp.fullname" . }} --template "{{"{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}"}}")
  echo http://\$SERVICE_IP:{{ .Values.service.port }}
{{- else if contains "ClusterIP" .Values.service.type }}
  export POD_NAME=\$(kubectl get pods --namespace {{ .Release.Namespace }} -l "app.kubernetes.io/name={{ include "coolapp.name" . }},app.kubernetes.io/instance={{ .Release.Name }}" -o jsonpath="{.items[0].metadata.name}")
  echo "Visit http://127.0.0.1:8080 to use your application"
  kubectl --namespace {{ .Release.Namespace }} port-forward \$POD_NAME 8080:8080
{{- end }}
EOF

Let's test that the template engine is working:

$ cd ~/devops/${YOUR_NAME}-jenkins
$ helm install --dry-run --debug test \
    --set accountId=${ACCOUNT_ID} \
    --set prefix=${REPO_PREFIX} \
    --set tag=latest ./coolapp

The templating should be working, so we're ready to test it automatically.
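Helm templates are Go templates, so the image line from deployment.yaml can be rendered in isolation to see how the --set values flow into it. The following is a rough sketch that uses only the standard text/template package, not Helm itself; the renderImage helper and the sample values are assumptions made for this example. The missingkey=error option is this sketch's own choice, used to surface a forgotten value.

```go
package main

import (
	"bytes"
	"fmt"
	"text/template"
)

// imageTmpl is the image expression used in coolapp/templates/deployment.yaml.
const imageTmpl = `{{ .Values.accountId }}.dkr.ecr.us-east-1.amazonaws.com/{{ .Values.prefix }}-coolapp:{{ .Values.tag }}`

// renderImage is a hypothetical helper that renders imageTmpl with the given
// values, roughly as the template engine would for --set accountId=... etc.
func renderImage(values map[string]string) (string, error) {
	tmpl, err := template.New("image").Option("missingkey=error").Parse(imageTmpl)
	if err != nil {
		return "", err
	}
	var buf bytes.Buffer
	data := map[string]interface{}{"Values": values}
	if err := tmpl.Execute(&buf, data); err != nil {
		return "", err
	}
	return buf.String(), nil
}

func main() {
	// Placeholder values standing in for ${ACCOUNT_ID} and ${REPO_PREFIX}.
	image, err := renderImage(map[string]string{
		"accountId": "123456789012",
		"prefix":    "your-name",
		"tag":       "latest",
	})
	if err != nil {
		fmt.Println("render failed:", err)
		return
	}
	fmt.Println(image)
}
```

In the dry-run output, the rendered image field of the Deployment should follow the same pattern with your own account ID and prefix.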

Create an integration test

Create a Go module:

$ mkdir -p ~/devops/${YOUR_NAME}-jenkins/test
$ cd ~/devops/${YOUR_NAME}-jenkins/test
$ go mod init integration

Create an integration test:

$ vi helm_test.go

And paste the following content:

package test

import (

"fmt"

"log"

"os"

"regexp"

"strings"

"testing"

"time"

"github.com/gruntwork-io/terratest/modules/helm"

http_helper "github.com/gruntwork-io/terratest/modules/http-helper"

"github.com/gruntwork-io/terratest/modules/k8s"

"github.com/gruntwork-io/terratest/modules/random"

)

func TestChartDeploysApplication(t *testing.T) {

// Get required variables

namespace := "default"

if ns := os.Getenv("TEST_NAMESPACE"); ns != "" {

reg, err := regexp.Compile("[^a-zA-Z0-9]+")

if err != nil {

log.Fatal(err)

}

namespace = reg.ReplaceAllString(ns, "-")

}

account := os.Getenv("TEST_ACCOUNT_ID")

prefix := os.Getenv("TEST_PREFIX")

tag := os.Getenv("TEST_TAG")

// Path to the helm chart we will test

helmChartPath := "../coolapp"

// Setup the kubectl config and context. Here we choose to use the defaults, which is:

// - HOME/.kube/config for the kubectl config file

// - Current context of the kubectl config file

// We also specify the namespace to work in

kubectlOptions := k8s.NewKubectlOptions("", "", namespace)

// Setup the args.

options := &helm.Options{

SetValues: map[string]string{"accountId": account, "prefix": prefix, "tag": tag},

KubectlOptions: kubectlOptions,

}

// At the end of the test, make sure to delete the namespace if it's not the default namespace.

// Deferred calls run in reverse order, so this defer is registered before the `helm delete` one:

// the release is then removed first and the namespace last.

if namespace != "default" {

defer k8s.DeleteNamespace(t, kubectlOptions, namespace)

// Create the namespace

k8s.CreateNamespace(t, kubectlOptions, namespace)

}

// We generate a unique release name so that we can refer to it after deployment.

// By doing so, we can schedule the delete call here so that at the end of the test, we run

// `helm delete RELEASE_NAME` to clean up any resources that were created.

releaseName := fmt.Sprintf("test-%s", strings.ToLower(random.UniqueId()))

defer helm.Delete(t, options, releaseName, true)

// Deploy the chart using `helm install`.

helm.Install(t, options, helmChartPath, releaseName)

// Now that the chart is deployed, verify the deployment.

svcName := fmt.Sprintf("%s-coolapp", releaseName)

validateK8SApp(t, kubectlOptions, svcName)

}

// Validate the app is working

func validateK8SApp(t *testing.T, options *k8s.KubectlOptions, svcName string) {

// This will wait up to 20 seconds for the service to become available, to ensure that we can access it.

k8s.WaitUntilServiceAvailable(t, options, svcName, 10, 2*time.Second)

// Now we verify that the service will successfully boot and start serving requests

url := serviceUrl(t, options, svcName)

expectedStatus := 200

expectedBody := `{"message": "hello from production"}`

maxRetries := 10

timeBetweenRetries := 3 * time.Second

http_helper.HttpGetWithRetry(t, url, nil, expectedStatus, expectedBody, maxRetries, timeBetweenRetries)

}

// Get the service URL from Kubernetes

func serviceUrl(t *testing.T, options *k8s.KubectlOptions, svcName string) string {

service := k8s.GetService(t, options, svcName)

endpoint := k8s.GetServiceEndpoint(t, options, service, 80)

return fmt.Sprintf("http://%s", endpoint)

}

Cloud Shell has little compute capacity, so a local test run may take very long to compile or fail to compile at all. Let's modify our Jenkinsfile and run the test inside the cluster!
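Before moving on, it may help to see what the namespace clean-up in the test does to a typical TEST_NAMESPACE value. The sketch below is standalone and only reuses the regular expression from helm_test.go; the helper name sanitizeNamespace and the sample branch names are made up for illustration.

```go
package main

import (
	"fmt"
	"regexp"
)

// nonAlnum matches runs of characters other than ASCII letters and digits.
// It is the same pattern that helm_test.go compiles.
var nonAlnum = regexp.MustCompile("[^a-zA-Z0-9]+")

// sanitizeNamespace (hypothetical helper) mirrors the replacement done in the test:
// every run of non-alphanumeric characters becomes a single dash.
func sanitizeNamespace(raw string) string {
	return nonAlnum.ReplaceAllString(raw, "-")
}

func main() {
	// Sample values in the "${BRANCH_NAME}.${BUILD_NUMBER}" shape (illustrative only).
	for _, raw := range []string{"add_helm.5", "master.12", "feature/login.3"} {
		fmt.Printf("%s -> %s\n", raw, sanitizeNamespace(raw))
	}
}
```

Note that the pattern leaves uppercase letters untouched, while Kubernetes namespace names must be lowercase. A branch name containing capitals would probably need an extra strings.ToLower call before it can be used as a namespace.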

Change to the project directory and edit Jenkinsfile:

$ cd ~/devops/${YOUR_NAME}-jenkins

$ vi Jenkinsfile

Paste the following (replace everything!):

pipeline {

agent none

environment {

ACCOUNT_ID = "AWS_ACCOUNT_ID"

REPO_PREFIX = "AWS_REPO_PREFIX"

BUILD_CONTEXT_BUCKET = "${REPO_PREFIX}-artifacts-jenkins"

BUILD_CONTEXT = "build-context-${BRANCH_NAME}.${BUILD_NUMBER}.tar.gz"

ECR_IMAGE = "${ACCOUNT_ID}.dkr.ecr.us-east-1.amazonaws.com/${REPO_PREFIX}-coolapp:${BRANCH_NAME}.${BUILD_NUMBER}"

}

stages {

stage('Test and Build the App') {

agent {

kubernetes {

yaml """

apiVersion: v1

kind: Pod

metadata:

name: coolappbuilder

labels:

robot: builder

spec:

serviceAccount: my-release-jenkins-agent

containers:

- name: jnlp

- name: golang

image: golang:1.15

command:

- cat

tty: true

- name: aws

image: amazon/aws-cli

command:

- /bin/bash

args:

- -c

- \"trap : TERM INT; sleep infinity & wait\"

"""

}

}

steps {

container(name: 'golang', shell: '/bin/bash') {

// run tests

sh 'go test ./ -v -short'

// generate artifact

sh 'CGO_ENABLED=0 GOOS=linux GOARCH=amd64 go build -a -ldflags \'-w -s -extldflags "-static"\' -o app .'

// archive the build context for kaniko.

// It's unnecessary to archive everything, but for the lab it's ok :)

sh "tar --exclude='./.git' -zcvf /tmp/$BUILD_CONTEXT ."

sh "mv /tmp/$BUILD_CONTEXT ."

}

container(name: 'aws', shell: '/bin/bash') {

sh "aws s3 cp ${BUILD_CONTEXT} s3://${BUILD_CONTEXT_BUCKET}"

}

}

}

stage('Build image with Kaniko') {

agent {

kubernetes {

yaml """

apiVersion: v1

kind: Pod

metadata:

name: coolapppusher

labels:

robot: pusher

spec:

serviceAccount: my-release-jenkins-agent

containers:

- name: jnlp

- name: kaniko

image: gcr.io/kaniko-project/executor:v1.8.1-debug

imagePullPolicy: Always

command:

- /busybox/cat

tty: true

volumeMounts:

- name: docker-config

mountPath: /kaniko/.docker/

env:

- name: AWS_REGION

value: us-east-1

volumes:

- name: docker-config

configMap:

name: docker-config

"""

}

}

environment {

PATH = "/busybox:/kaniko:$PATH"

}

steps {

container(name: 'kaniko', shell: '/busybox/sh') {

sh '''#!/busybox/sh

/kaniko/executor -f `pwd`/Dockerfile -c `pwd` --context="s3://${BUILD_CONTEXT_BUCKET}/${BUILD_CONTEXT}" --verbosity debug --destination ${ECR_IMAGE}

'''

}

}

}

stage('Integration Test') {

agent {

kubernetes {

yaml """

apiVersion: v1

kind: Pod

metadata:

name: coolapptester

labels:

robot: tester

spec:

serviceAccount: my-release-jenkins-agent

containers:

- name: jnlp

- name: golang

image: golang

command:

- cat

tty: true

volumeMounts:

- name: kubeconfig

mountPath: /root/.kube

volumes:

- name: kubeconfig

secret:

secretName: kubeconfig

"""

}

}

environment {

TEST_NAMESPACE = "${BRANCH_NAME}.${BUILD_NUMBER}"

TEST_ACCOUNT_ID = "${ACCOUNT_ID}"

TEST_PREFIX = "${REPO_PREFIX}"

TEST_TAG = "${BRANCH_NAME}.${BUILD_NUMBER}"

}

steps {

container(name: 'golang', shell: '/bin/bash') {

dir("test") {

// get helm cli

sh 'curl -fsSL -o helm-v3.11.2-linux-amd64.tar.gz https://get.helm.sh/helm-v3.11.2-linux-amd64.tar.gz'

sh 'tar -zxvf helm-v3.11.2-linux-amd64.tar.gz'

sh 'chmod +x linux-amd64/helm'

sh 'mv linux-amd64/helm /usr/local/bin/helm'

// get dependencies

sh 'go mod tidy'

// run tests

sh 'go test ./ -v -short'

}

}

}

}

}

}

Substitute the account ID and repo prefix variables:

$ export ACCOUNT_ID=`aws sts get-caller-identity --query "Account" --output text`

$ sed -i -e "s/AWS_ACCOUNT_ID/${ACCOUNT_ID}/g" \

-e "s/AWS_REPO_PREFIX/${REPO_PREFIX}/g" Jenkinsfile

Before pushing, we need one final addition. Terratest currently supports only kubeconfig-based authorization, so we need to generate a kubeconfig for our Jenkins Service Account.

First, create a Service Account token secret:

$ cat <<EOF | kubectl apply -f -

apiVersion: v1

kind: Secret

metadata:

name: my-release-jenkins-agent-secret

annotations:

kubernetes.io/service-account.name: my-release-jenkins-agent

type: kubernetes.io/service-account-token

EOF

Then, use it to generate the Kubeconfig file:

$ cd ~/devops

$ secret="my-release-jenkins-agent-secret"

$ ca=$(kubectl get secret/$secret -o jsonpath='{.data.ca\.crt}')

$ token=$(kubectl get secret/$secret -o jsonpath='{.data.token}' | base64 --decode)

$ cat <<EOF >./config

apiVersion: v1

kind: Config

clusters:

- name: default-cluster

cluster:

certificate-authority-data: ${ca}

server: https://kubernetes

contexts:

- name: default-context

context:

cluster: default-cluster

namespace: default

user: default-user

current-context: default-context

users:

- name: default-user

user:

token: ${token}

EOF

Create a Kubernetes Secret from the Kubeconfig file so that Jenkins can access it:

$ cd ~/devops

$ kubectl create secret generic kubeconfig \

--from-file=./config

Finally, push the application code to the CodeCommit repository.

$ cd ~/devops/${YOUR_NAME}-jenkins

$ git add --all

$ git commit -a -m "Added Integration Test"

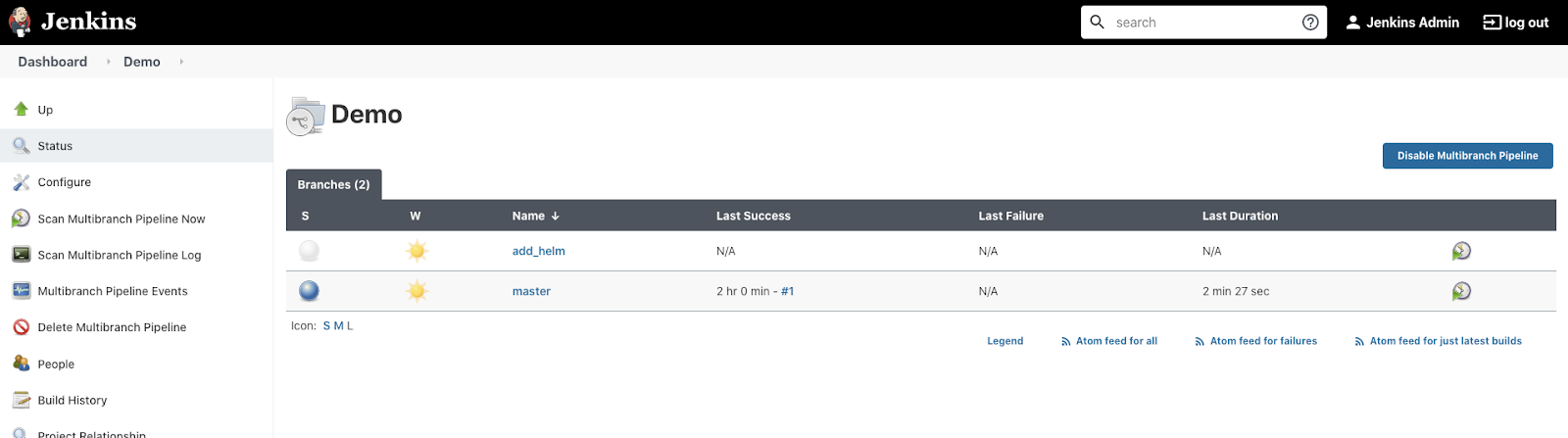

$ git push --set-upstream origin add_helm

Go to the Jenkins Web Interface and click the Demo project. Wait up to 1 minute and check if the new branch is being tested.

Click on add_helm to see build details. After about 3-4 minutes the test should be finished.

Jenkinsfile review

Before we move on to the next step let's review the new Jenkinsfile configuration.

We've created a new stage, Integration Test, whose agent configuration is specified as a Pod manifest for the Kubernetes plugin. We specify the service account to use with this agent Pod. We also mount the kubeconfig secret so that Terratest can use it.
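The mount path matters because the test passes empty strings to k8s.NewKubectlOptions and so relies on the default kubeconfig location. The sketch below only illustrates the path arithmetic. It assumes the golang container runs as root with its home directory at /root, and that the Secret key is named config because the Secret was created with --from-file=./config. Both helper names are invented for this example.

```go
package main

import (
	"fmt"
	"path"
)

// defaultKubeconfig (hypothetical helper) returns the conventional
// kubeconfig location for a given home directory.
func defaultKubeconfig(home string) string {
	return path.Join(home, ".kube", "config")
}

// mountedFile (hypothetical helper) returns where a Secret key shows up
// when the Secret is mounted as a volume: each key becomes a file under mountPath.
func mountedFile(mountPath, key string) string {
	return path.Join(mountPath, key)
}

func main() {
	want := defaultKubeconfig("/root")          // assumed home directory of the container user
	got := mountedFile("/root/.kube", "config") // mountPath from the Pod manifest, key from the Secret

	fmt.Println("default location:", want)
	fmt.Println("mounted file:    ", got)
	fmt.Println("match:", got == want)
}
```

If the container ran as a different user, or the Secret key had another name, the two paths would no longer line up and the kubeconfig would have to be pointed to explicitly.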

We have 2 containers needed for the job:

- Default Jenkins agent - jnlp

- golang - to test the application.

stage('Integration Test') {

agent {

kubernetes {

yaml """

apiVersion: v1

kind: Pod

metadata:

name: coolapptester

labels:

robot: tester

spec:

serviceAccount: my-release-jenkins-agent

containers:

- name: jnlp

- name: golang

image: golang

command:

- cat

tty: true

volumeMounts:

- name: kubeconfig

mountPath: /root/.kube

volumes:

- name: kubeconfig

secret:

secretName: kubeconfig

"""

}

}

steps {

...

}

}

The stage's steps download the Helm binary and use Terratest to deploy and test our application inside the cluster.

steps {

container(name: 'golang', shell: '/bin/bash') {

dir("test") {

// get helm cli

sh 'curl -fsSL -o helm-v3.11.2-linux-amd64.tar.gz https://get.helm.sh/helm-v3.11.2-linux-amd64.tar.gz'

sh 'tar -zxvf helm-v3.11.2-linux-amd64.tar.gz'

sh 'chmod +x linux-amd64/helm'

sh 'mv linux-amd64/helm /usr/local/bin/helm'

// get dependencies

sh 'go mod tidy'

// run tests

sh 'go test ./ -v -short'

}

}

}

When we are sure the app is working as intended, we can deploy it to "production".

Change to the project directory and edit Jenkinsfile:

$ cd ~/devops/${YOUR_NAME}-jenkins

$ vi Jenkinsfile

Paste the following (replace everything!):

pipeline {

agent none

environment {

ACCOUNT_ID = "AWS_ACCOUNT_ID"

REPO_PREFIX = "AWS_REPO_PREFIX"

BUILD_CONTEXT_BUCKET = "${REPO_PREFIX}-artifacts-jenkins"

BUILD_CONTEXT = "build-context-${BRANCH_NAME}.${BUILD_NUMBER}.tar.gz"

ECR_IMAGE = "${ACCOUNT_ID}.dkr.ecr.us-east-1.amazonaws.com/${REPO_PREFIX}-coolapp:${BRANCH_NAME}.${BUILD_NUMBER}"

}

stages {

stage('Test and Build the App') {

agent {

kubernetes {

yaml """

apiVersion: v1

kind: Pod

metadata:

name: coolappbuilder

labels:

robot: builder

spec:

serviceAccount: my-release-jenkins-agent

containers:

- name: jnlp

- name: golang

image: golang:1.15

command:

- cat

tty: true

- name: aws

image: amazon/aws-cli

command:

- /bin/bash

args:

- -c

- \"trap : TERM INT; sleep infinity & wait\"

"""

}

}

steps {

container(name: 'golang', shell: '/bin/bash') {

// run tests

sh 'go test ./ -v -short'

// generate artifact

sh 'CGO_ENABLED=0 GOOS=linux GOARCH=amd64 go build -a -ldflags \'-w -s -extldflags "-static"\' -o app .'

// archive the build context for kaniko.

// It's unnecessary to archive everything, but for the lab it's ok :)

sh "tar --exclude='./.git' -zcvf /tmp/$BUILD_CONTEXT ."

sh "mv /tmp/$BUILD_CONTEXT ."

}

container(name: 'aws', shell: '/bin/bash') {

sh "aws s3 cp ${BUILD_CONTEXT} s3://${BUILD_CONTEXT_BUCKET}"

}

}

}

stage('Build image with Kaniko') {

agent {

kubernetes {

yaml """

apiVersion: v1

kind: Pod

metadata:

name: coolapppusher

labels:

robot: pusher

spec:

serviceAccount: my-release-jenkins-agent

containers:

- name: jnlp

- name: kaniko

image: gcr.io/kaniko-project/executor:v1.8.1-debug

imagePullPolicy: Always

command:

- /busybox/cat

tty: true

volumeMounts:

- name: docker-config

mountPath: /kaniko/.docker/

env:

- name: AWS_REGION

value: us-east-1

volumes:

- name: docker-config

configMap:

name: docker-config

"""

}

}

environment {

PATH = "/busybox:/kaniko:$PATH"

}

steps {

container(name: 'kaniko', shell: '/busybox/sh') {

sh '''#!/busybox/sh

/kaniko/executor -f `pwd`/Dockerfile -c `pwd` --context="s3://${BUILD_CONTEXT_BUCKET}/${BUILD_CONTEXT}" --verbosity debug --destination ${ECR_IMAGE}

'''

}

}

}

stage('Integration Test') {

agent {

kubernetes {

yaml """

apiVersion: v1

kind: Pod

metadata:

name: coolapptester

labels:

robot: tester

spec:

serviceAccount: my-release-jenkins-agent

containers:

- name: jnlp

- name: golang

image: golang

command:

- cat

tty: true

volumeMounts:

- name: kubeconfig

mountPath: /root/.kube

volumes:

- name: kubeconfig

secret:

secretName: kubeconfig

"""

}

}

environment {

TEST_NAMESPACE = "${BRANCH_NAME}.${BUILD_NUMBER}"

TEST_ACCOUNT_ID = "${ACCOUNT_ID}"

TEST_PREFIX = "${REPO_PREFIX}"

TEST_TAG = "${BRANCH_NAME}.${BUILD_NUMBER}"

}

steps {

container(name: 'golang', shell: '/bin/bash') {

dir("test") {

// get helm cli

sh 'curl -fsSL -o helm-v3.11.2-linux-amd64.tar.gz https://get.helm.sh/helm-v3.11.2-linux-amd64.tar.gz'

sh 'tar -zxvf helm-v3.11.2-linux-amd64.tar.gz'

sh 'chmod +x linux-amd64/helm'

sh 'mv linux-amd64/helm /usr/local/bin/helm'

// get dependencies

sh 'go mod tidy'

// run tests

sh 'go test ./ -v -short'

}

}

}

}

stage('Wait for SRE Approval') {

when { branch 'master' }

steps {

timeout(time: 12, unit: 'HOURS') {

input message: 'Approve deployment?'

}

}

}

stage('Deploy to Production') {

when { branch 'master' }

agent {

kubernetes {

yaml """

apiVersion: v1

kind: Pod

metadata:

name: coolappdeployer

labels:

robot: deploy

spec:

serviceAccount: my-release-jenkins-agent

containers:

- name: jnlp

- name: helm

image: ubuntu

command:

- cat

tty: true

"""

}

}

environment {

TAG = "${BRANCH_NAME}.${BUILD_NUMBER}"

}

steps {

container(name: 'helm', shell: '/bin/bash') {

// get helm cli

sh 'apt-get update'

sh 'apt-get install -y curl'

sh 'curl -fsSL -o helm-v3.11.2-linux-amd64.tar.gz https://get.helm.sh/helm-v3.11.2-linux-amd64.tar.gz'

sh 'tar -zxvf helm-v3.11.2-linux-amd64.tar.gz'

sh 'chmod +x linux-amd64/helm'

sh 'mv linux-amd64/helm /usr/local/bin/helm'

// install

sh 'helm upgrade --install --wait coolapp ./coolapp/ --set accountId=${ACCOUNT_ID} --set prefix=${REPO_PREFIX} --set tag=${TAG} --set service.type=LoadBalancer'

}

}

}

}

}

Substitute the account ID and repo prefix variables:

$ export ACCOUNT_ID=`aws sts get-caller-identity --query "Account" --output text`

$ sed -i -e "s/AWS_ACCOUNT_ID/${ACCOUNT_ID}/g" \

-e "s/AWS_REPO_PREFIX/${REPO_PREFIX}/g" Jenkinsfile

Finally, push the application code to the CodeCommit repository.

$ cd ~/devops/${YOUR_NAME}-jenkins

$ git add --all

$ git commit -a -m "Added Deployment"

$ git checkout master

$ git merge add_helm

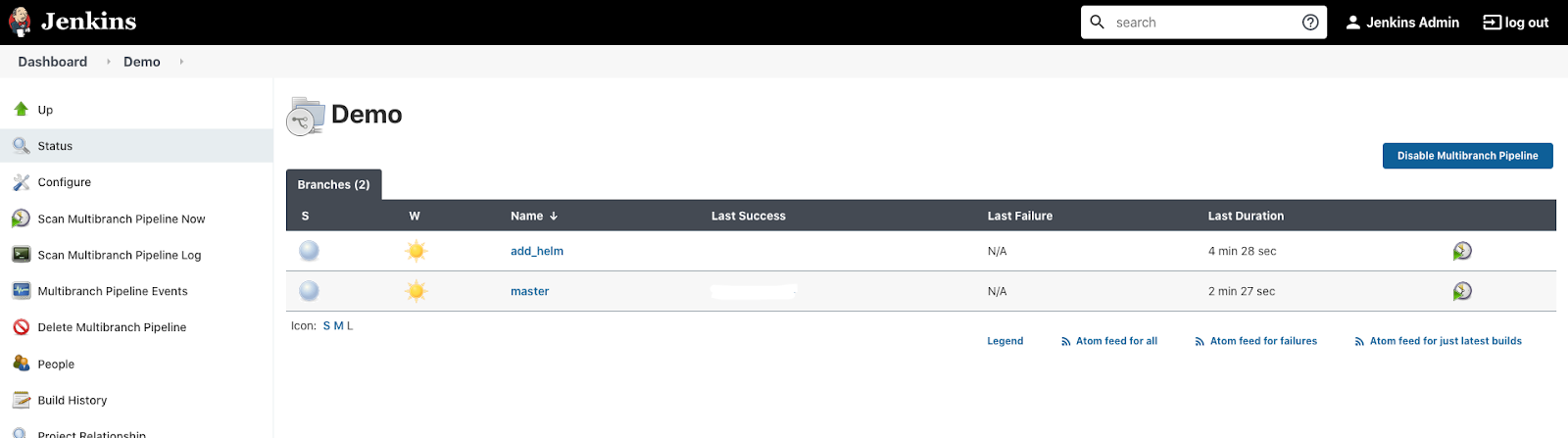

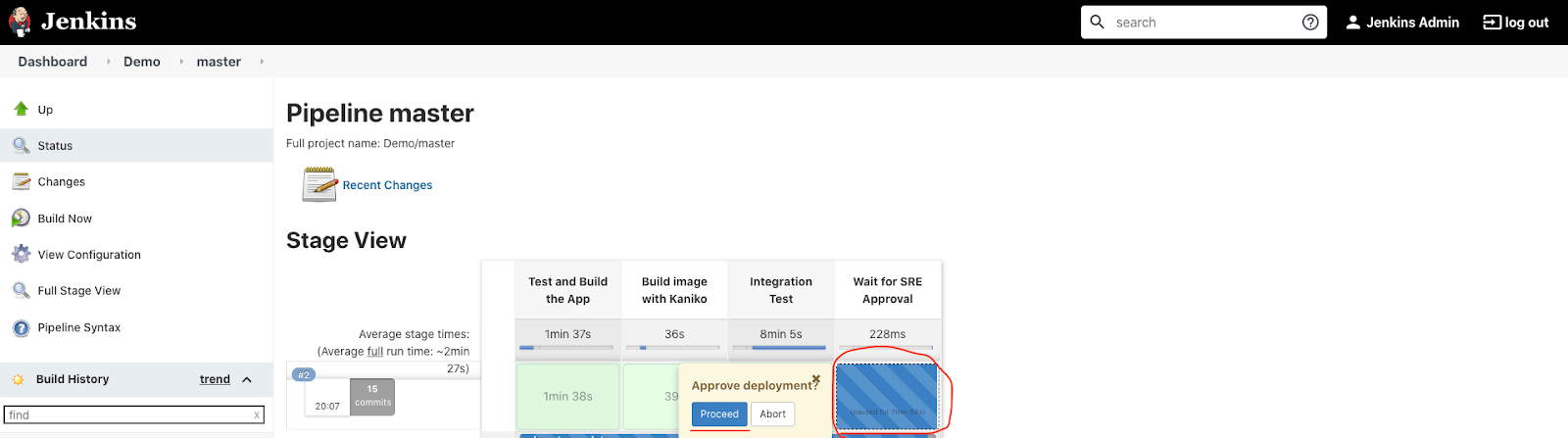

$ git push --all

Go to the Jenkins Web Interface and click the Demo project. Wait a few seconds and check if both branches are being tested.

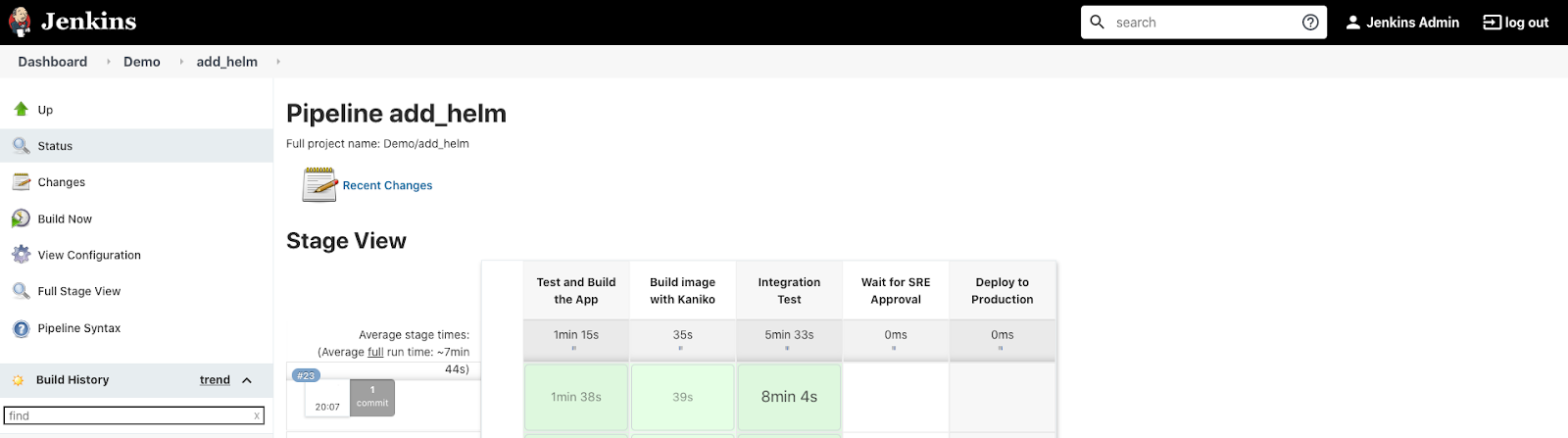

Wait for about 3-5 minutes and click the add_helm branch. You should see that only 3 stages were executed.

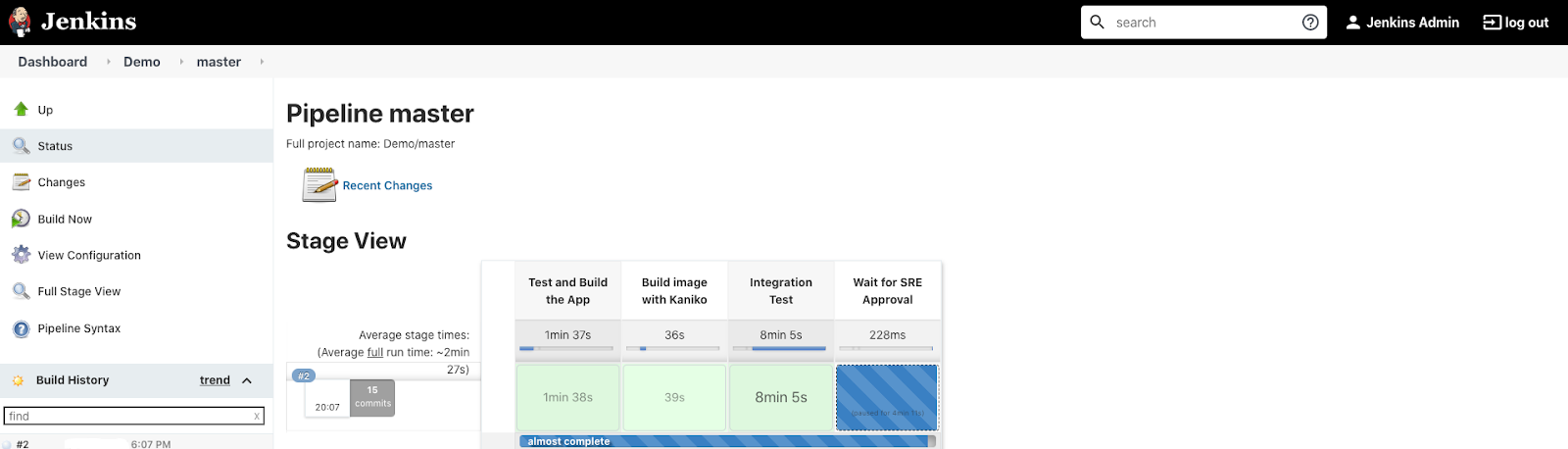

Go back to the Demo project and click the master branch. You should see that there is a pending stage.

Hover over the pending stage; an approval pop-up should appear. Click Proceed.

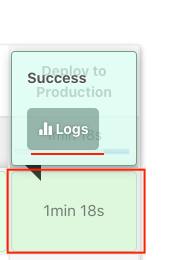

You should see a new stage start. Wait until the application is deployed. Hover over the 'Deploy to Production' stage and click Logs.

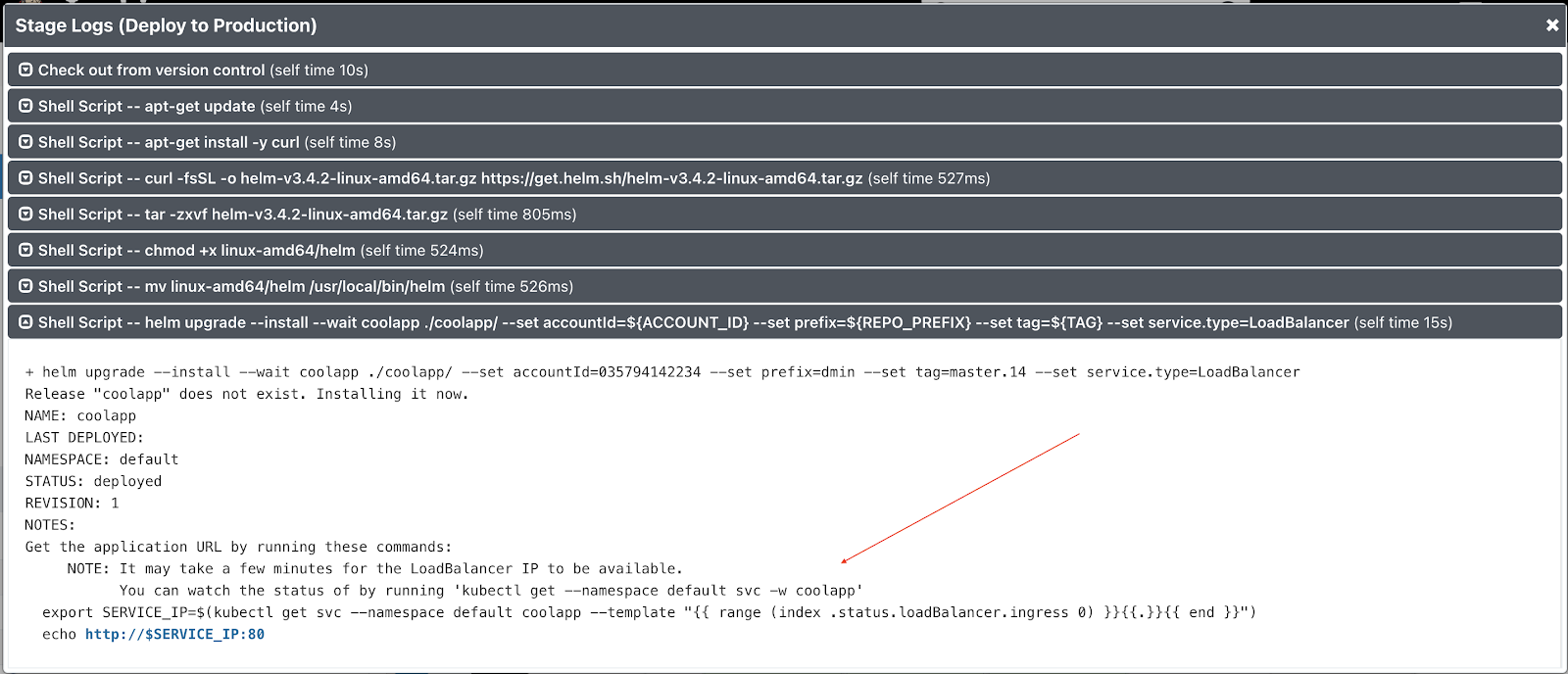

You should see every command executed during the last stage. Open the very last step (with helm upgrade ...).

Follow the Helm instructions printed in the console output to verify that the application is working.

Jenkinsfile review

Let's review the final Jenkinsfile configuration.

We've created two new stages, Wait for SRE Approval and Deploy to Production. The latter has its agent configuration specified as a Pod manifest for the Kubernetes plugin. We specify the service account to use with this agent Pod.

We have 2 containers needed for the job:

- Default Jenkins agent - jnlp

- ubuntu - to install Helm binary and deploy the application.

stage('Deploy to Production') {

when { branch 'master' }

agent {

kubernetes {

yaml """

apiVersion: v1

kind: Pod

metadata:

name: coolappdeployer

labels:

robot: deploy

spec:

serviceAccount: my-release-jenkins-agent

containers:

- name: jnlp

- name: helm

image: ubuntu

command:

- cat

tty: true

"""

}

}

steps {

...

}

}

The stage's steps download the Helm binary and use it to deploy our application into the default namespace of the cluster. They also set the service type to LoadBalancer.

steps {

container(name: 'helm', shell: '/bin/bash') {

// get helm cli

sh 'apt-get update'

sh 'apt-get install -y curl'

sh 'curl -fsSL -o helm-v3.11.2-linux-amd64.tar.gz https://get.helm.sh/helm-v3.11.2-linux-amd64.tar.gz'

sh 'tar -zxvf helm-v3.11.2-linux-amd64.tar.gz'

sh 'chmod +x linux-amd64/helm'

sh 'mv linux-amd64/helm /usr/local/bin/helm'

// install

sh 'helm upgrade --install --wait coolapp ./coolapp/ --set accountId=${ACCOUNT_ID} --set prefix=${REPO_PREFIX} --set tag=${TAG} --set service.type=LoadBalancer'

}

}

But before we launch the final stage, we wait for manual approval:

stage('Wait for SRE Approval') {

when { branch 'master' }

steps{

timeout(time:12, unit:'HOURS') {

input message:'Approve deployment?'

}

}

}

Both of these stages have the condition when { branch 'master' }, so they run only for the master branch.
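One detail that ties the stages together is the image tag: ECR_IMAGE and the TAG passed to Helm both use the same branch and build number. The sketch below rebuilds the ECR_IMAGE string from its parts. The function name imageRef and the sample account ID, prefix, and build number are placeholders, and it is only an assumption that the chart assembles the image reference from accountId, prefix, and tag in the same way.

```go
package main

import "fmt"

// imageRef (hypothetical helper) follows the ECR_IMAGE pattern from the Jenkinsfile:
// ACCOUNT_ID.dkr.ecr.REGION.amazonaws.com/REPO_PREFIX-coolapp:BRANCH_NAME.BUILD_NUMBER
func imageRef(accountID, region, prefix, branch string, build int) string {
	return fmt.Sprintf("%s.dkr.ecr.%s.amazonaws.com/%s-coolapp:%s.%d",
		accountID, region, prefix, branch, build)
}

func main() {
	// Placeholder values, not taken from a real account.
	fmt.Println(imageRef("123456789012", "us-east-1", "demo", "master", 7))
}
```

Because the tag changes with every build, each deployment on master points at the image that Kaniko pushed earlier in the same pipeline run.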

Delete the ECR repository:

$ aws ecr delete-repository \

--repository-name ${REPO_PREFIX}-coolapp \

--force

Delete the S3 Artifact archive:

$ aws s3 rb s3://${REPO_PREFIX}-artifacts-jenkins --force

Delete the IAM User:

$ export POLICY_ARN=$(aws iam list-policies --query 'Policies[?PolicyName==`AWSCodeCommitPowerUser`].{ARN:Arn}' --output text)

$ aws iam detach-user-policy --user-name Training-${YOUR_NAME}-jenkins --policy-arn $POLICY_ARN

$ aws iam delete-ssh-public-key --user-name Training-${YOUR_NAME}-jenkins --ssh-public-key-id $(aws iam list-ssh-public-keys --user-name Training-${YOUR_NAME}-jenkins | jq -r '.SSHPublicKeys[].SSHPublicKeyId')

$ aws iam delete-user --user-name Training-${YOUR_NAME}-jenkins

Delete the CodeCommit Repository:

$ aws codecommit delete-repository --repository-name ${YOUR_NAME}-jenkins

To delete the cluster, execute the following command:

$ kops delete cluster --name $NAME --yes

When the cluster is removed, delete the bucket:

$ aws s3api delete-bucket \

--bucket ${STATE_BUCKET} \

--region ${REGION}

When the cluster and images are removed, manually terminate the kops instance.

Thank you! :)