Last Updated: 2023-02-03

HashiCorp Terraform

Terraform is a tool for building, changing, and versioning infrastructure safely and efficiently. Terraform can manage existing and popular service providers as well as custom in-house solutions. Terraform can provision infrastructure across many different types of cloud providers, including AWS, Azure, Google Cloud, DigitalOcean, and many others.

Configuration files describe to Terraform the components needed to run a single application or your entire datacenter. Terraform generates an execution plan describing what it will do to reach the desired state, and then executes it to build the described infrastructure. As the configuration changes, Terraform is able to determine what changed and create incremental execution plans which can be applied.

The infrastructure Terraform can manage includes low-level components such as compute instances, storage, and networking, as well as high-level components such as DNS entries, SaaS features, etc.

What you'll build

In this codelab, you're going to use Terraform to automate infrastructure deployment.

What you'll need

- A recent version of your favourite Web Browser

- Basics of BASH

- Cloud Shell in our AWS Account

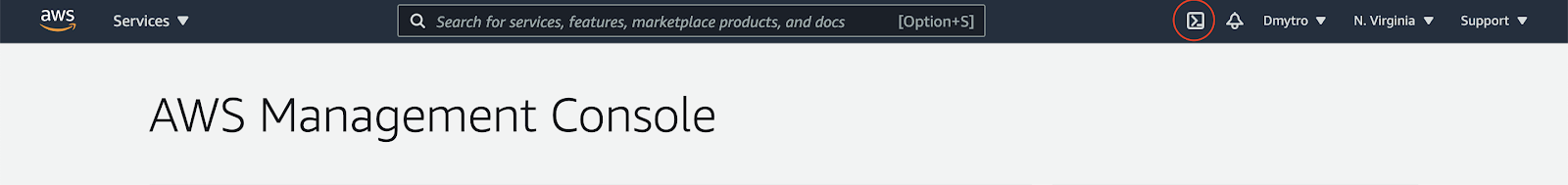

Open CloudShell

You will do most of the work from AWS CloudShell, a command-line environment running in the cloud. This virtual machine comes with the development tools you'll need (AWS CLI, Python), offers a persistent 1 GB home directory, and runs inside AWS, so it is already authenticated with your console credentials and has fast network access to AWS services. Open CloudShell by clicking on the icon on the top right of the screen:

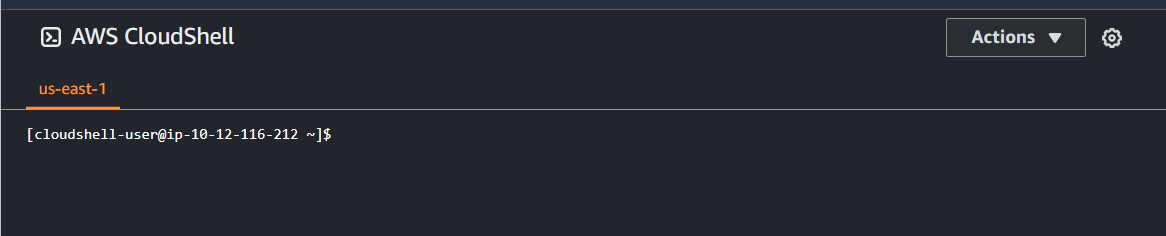

You should see the shell prompt open in the new tab:

Initial setup

Install Terraform:

$ sudo yum install -y yum-utils

$ sudo yum-config-manager --add-repo https://rpm.releases.hashicorp.com/AmazonLinux/hashicorp.repo

$ sudo yum -y install terraform git

Enable Terraform autocomplete to help you write commands faster, and add syntax highlighting to Vim:

$ terraform -install-autocomplete

$ git clone https://github.com/hashivim/vim-terraform.git ~/.vim/pack/plugins/start/vim-terraform

Credentials

In a real-world scenario, you would use a limited user account with only the permissions this lab needs (these are still more permissive than they should be in production):

- AmazonEC2FullAccess

- AmazonS3FullAccess

- AmazonDynamoDBFullAccess

You can create this account with programmatic access at Identity and Access Management (IAM) console. Then, after receiving your user credentials you should set them up in the terminal, so Terraform can use them to manage infrastructure:

$ export AWS_ACCESS_KEY_ID=(your access key id)

$ export AWS_SECRET_ACCESS_KEY=(your secret access key)

Create basic EC2 instance

Terraform code is written in a language called HCL in files with the extension .tf. It is a declarative language, so your goal is to describe the infrastructure you want, and Terraform will figure out how to create it. Terraform can create infrastructure across a wide variety of platforms, or in its terminology - providers, including AWS, Azure, Google Cloud, DigitalOcean, and many others.

The general HCL syntax for a Terraform resource is:

resource "<PROVIDER>_<TYPE>" "<NAME>" {

[CONFIG ...]

}

Where PROVIDER is the name of a provider, TYPE is the type of resources to create in that provider, NAME is an identifier you can use throughout the Terraform code to refer to this resource, and CONFIG consists of one or more arguments that are specific to that resource.

You can write Terraform code in just about any text editor. If you search around, you can find Terraform syntax highlighting support for most editors (note, you may have to search for the word "HCL" instead of "Terraform"), including vim, emacs, Visual Studio Code, and IntelliJ (the latter even has support for refactoring, find usages, and go to declaration).

Create and change to the Demo directory:

$ mkdir -p ~/terraform-demo/basic

$ cd ~/terraform-demo/basic

The first step to using Terraform is typically to configure the provider(s) you want to use. Create a file called main.tf:

$ vi main.tf

Put the following code in it:

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 4.16"

}

}

required_version = ">= 1.2.0"

}

provider "aws" {

region = "us-east-1"

}

This tells Terraform that you are going to be using the AWS provider and that you wish to deploy your infrastructure in the us-east-1 region.

You can configure other settings for the AWS provider, but for this lab, since you're using Cloud Shell, you only need to specify the region.
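As a rough illustration of those other settings, the sketch below adds a default_tags block so that taggable resources created through this provider inherit a common set of tags. The tag keys and values are placeholders, not part of this lab, and the plan output shown in the rest of the lab assumes this block is not used.

```hcl
# Sketch only: an alternative to the provider block above, not an addition to it.
provider "aws" {
  region = "us-east-1"

  # Tags applied to resources that support tagging (placeholder values).
  default_tags {
    tags = {
      Project = "terraform-demo"
      Owner   = "your-name"
    }
  }
}
```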

For each provider, there are many different kinds of resources you can create, such as servers, databases, and load balancers. Let's first figure out how to deploy a single server that will host a static website. Add the following code to main.tf, which uses the aws_instance resource to deploy an EC2 Instance:

resource "aws_instance" "example" {

ami = "ami-007855ac798b5175e"

instance_type = "t2.micro"

}

For the aws_instance resource, there are many different arguments, but for now, you only need to set the following ones:

- ami: The Amazon Machine Image (AMI) to run on the EC2 Instance. You can find free and paid AMIs in the AWS Marketplace. The preceding code sets the ami parameter to the ID of an Ubuntu AMI in us-east-1. The AMI IDs are different for every region and change when the image is updated. Later we'll see how to find them dynamically.

- instance_type: The type of EC2 Instance to run.
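One possible way to avoid hard-coding the AMI ID is to look it up with the aws_ami data source (data sources are introduced later in this lab). The sketch below is an illustration, not the lab's method: the Ubuntu release (22.04) and the name pattern are assumptions, since the lab does not say which release its AMI ID corresponds to, and the name "ubuntu" is arbitrary.

```hcl
# Sketch only: look up the newest matching Ubuntu AMI in the provider's region.
data "aws_ami" "ubuntu" {
  most_recent = true
  owners      = ["099720109477"] # Canonical's AWS account ID

  filter {
    name   = "name"
    values = ["ubuntu/images/hvm-ssd/ubuntu-jammy-22.04-amd64-server-*"] # assumed release
  }

  filter {
    name   = "virtualization-type"
    values = ["hvm"]
  }
}

resource "aws_instance" "example" {
  ami           = data.aws_ami.ubuntu.id # replaces the hard-coded AMI ID
  instance_type = "t2.micro"
}
```

Because most_recent picks up new images as they are published, a later apply may propose replacing the instance; pinning the ID, as the lab does, avoids that.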

Now, save the file and run:

$ terraform init

Initializing the backend...

Initializing provider plugins...

- Finding latest version of hashicorp/aws...

- Installing hashicorp/aws v3.9.1...

- Installed hashicorp/aws v3.9.1 (signed by HashiCorp)

(...)

* hashicorp/aws: version = "~> 3.9.1"

Terraform has been successfully initialized!

The terraform binary contains the basic functionality for Terraform, but it does not come with the code for any of the providers, so when first starting to use Terraform, you need to run terraform init to tell Terraform to scan the code, figure out what providers you're using, and download the code for them. The provider version in your output will differ from the sample above, because Terraform picks the newest release that satisfies the version constraint in main.tf. By default, the provider code will be downloaded into a .terraform folder, which is Terraform's scratch directory (you may want to add it to .gitignore). You'll see a few other uses for the init command and .terraform folder later on.

For now, just be aware that you need to run init any time you start with new Terraform code, and that it's safe to run init multiple times (the command is idempotent).

Now that you have the provider code downloaded, run the terraform plan command:

$ terraform plan

Refreshing Terraform state in-memory prior to plan...

(...)

Terraform will perform the following actions:

# aws_instance.example will be created

+ resource "aws_instance" "example" {

+ ami = "ami-007855ac798b5175e"

+ arn = (known after apply)

+ associate_public_ip_address = (known after apply)

+ availability_zone = (known after apply)

+ cpu_core_count = (known after apply)

+ cpu_threads_per_core = (known after apply)

+ get_password_data = false

+ host_id = (known after apply)

+ id = (known after apply)

+ instance_state = (known after apply)

+ instance_type = "t2.micro"

+ ipv6_address_count = (known after apply)

+ ipv6_addresses = (known after apply)

+ key_name = (known after apply)

+ outpost_arn = (known after apply)

+ password_data = (known after apply)

+ placement_group = (known after apply)

+ primary_network_interface_id = (known after apply)

+ private_dns = (known after apply)

+ private_ip = (known after apply)

+ public_dns = (known after apply)

+ public_ip = (known after apply)

+ secondary_private_ips = (known after apply)

+ security_groups = (known after apply)

+ source_dest_check = true

+ subnet_id = (known after apply)

+ tenancy = (known after apply)

+ volume_tags = (known after apply)

+ vpc_security_group_ids = (known after apply)

(...)

Plan: 1 to add, 0 to change, 0 to destroy.

The plan command lets you see what Terraform will do before actually doing it. This is a great way to sanity check your changes before unleashing them onto the world. The output of the plan command is a little like the output of the diff command: resources with a plus sign (+) are going to be created, resources with a minus sign (-) are going to be deleted, and resources with a tilde sign (~) are going to be modified in-place. In the output above, you can see that Terraform is planning on creating a single EC2 Instance and nothing else, which is exactly what we want.

To actually create the instance, run the terraform apply command:

$ terraform apply

(...)

Terraform will perform the following actions:

(...)

Plan: 1 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value:

You'll notice that the apply command shows you the same plan output and asks you to confirm if you actually want to proceed with this plan. So while plan is available as a separate command, it's mainly useful for quick sanity checks and during code reviews, and most of the time you'll run apply directly and review the plan output it shows you.

Type in "yes" and hit enter to deploy the EC2 Instance:

aws_instance.example: Creating...

aws_instance.example: Still creating... [10s elapsed]

aws_instance.example: Still creating... [20s elapsed]

aws_instance.example: Still creating... [30s elapsed]

aws_instance.example: Creation complete after 32s [id=...]

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

Now, your instance is deployed with Terraform! To verify this, you can log in to the EC2 console.

You will see that it's working, but the Instance doesn't have a name. To add one, you can add a tag to the EC2 instance. Modify the main.tf file:

$ vi main.tf

And make it look like this:

resource "aws_instance" "example" {

ami = "ami-007855ac798b5175e"

instance_type = "t2.micro"

tags = {

Name = "ec2-example"

}

}

Run terraform apply again to see what this would do:

$ terraform apply

aws_instance.example: Refreshing state... [id=...]

(...)

Terraform will perform the following actions:

# aws_instance.example will be updated in-place

~ resource "aws_instance" "example" {

ami = "ami-007855ac798b5175e"

arn = "arn:aws:ec2:us-east-1:716651504771:instance/i-066efb0383709ee2b"

associate_public_ip_address = true

availability_zone = "us-east-1a"

(...)

~ tags = {

+ "Name" = "ec2-example"

}

(...)

}

(...)

Plan: 0 to add, 1 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value:

Terraform keeps track of all the resources it already created for this set of configuration files, so it knows your EC2 Instance already exists (notice Terraform says "Refreshing state..." when you run the apply command), and it can show you a diff between what's currently deployed and what's in your Terraform code (this is one of the advantages of using a declarative language over a procedural one). The preceding diff shows that Terraform wants to create a single tag called "Name," which is exactly what you need, so type in "yes" and hit enter.

Refresh your EC2 console to see the change.

Configuring EC2 Instance

Let's configure the EC2 instance to run a simple Apache2 web server. We'll use a simple script to do this. Create a file in the same directory as main.tf and call it data.sh:

$ cd ~/terraform-demo/basic

$ vi data.sh

Paste the following content:

#!/bin/bash

sudo apt-get update

sudo apt-get install -y apache2

echo "Hello, World!" | sudo tee /var/www/html/index.html

sudo systemctl restart apache2

Your directory structure should look like this:

/home/cloudshell-user/terraform-demo/

└── basic

├── data.sh

└── main.tf

Normally, instead of using a stock AMI, you would use some tool (e.g., Packer) to create a custom AMI that has the web server installed on it. But we're going to run the script above as part of the EC2 Instance's User Data, which AWS will execute when the instance is booting. Modify main.tf:

$ vi main.tf

And replace the instance configuration with the following code:

resource "aws_instance" "example" {

ami = "ami-007855ac798b5175e"

instance_type = "t2.micro"

user_data = file("data.sh")

tags = {

Name = "ec2-example"

}

}

file is a built-in Terraform function that reads the contents of a file and returns them as a string.
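A relative path passed to file is resolved against the directory you run Terraform from. As a small sketch of a more robust variant (not required for this lab, where you always run Terraform from the directory containing main.tf), the path can be anchored to the module directory with path.module:

```hcl
# Sketch only: same instance as above, with the script path anchored to the module directory.
resource "aws_instance" "example" {
  ami           = "ami-007855ac798b5175e"
  instance_type = "t2.micro"

  # path.module is the directory containing this .tf file, so the lookup
  # does not depend on the shell's current working directory.
  user_data = file("${path.module}/data.sh")

  tags = {
    Name = "ec2-example"
  }
}
```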

You need to do one more thing before this web server works. By default, AWS does not allow incoming traffic from the internet to an EC2 Instance, and a security group created with Terraform allows no outgoing traffic unless you add an egress rule. To allow the EC2 Instance to receive traffic on port 80 and to download packages, you need to create a security group. Add another resource to main.tf:

resource "aws_security_group" "example" {

name = "terraform-example-sg"

ingress {

from_port = 80

to_port = 80

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

}

This code creates a new resource of type aws_security_group and specifies that this group allows incoming TCP requests on port 80 from the CIDR block 0.0.0.0/0 (that is, from any address) and allows outgoing requests to any address.

Simply creating a security group isn't enough; you also need to tell the EC2 Instance to actually use it by passing the ID of the security group into the vpc_security_group_ids argument of the aws_instance resource. To do that, you can use Terraform expressions.

An expression in Terraform is anything that returns a value. One particularly useful type of expression is a reference, which allows you to access values from other parts of your code. To access the ID of the security group resource, you are going to need to use a resource attribute reference, which uses the following syntax:

<PROVIDER>_<TYPE>.<NAME>.<ATTRIBUTE>

Where PROVIDER is the name of the provider (e.g., aws), TYPE is the type of resource (e.g., security_group), NAME is the name of that resource (e.g., the security group is named "example"), and ATTRIBUTE is either one of the arguments of that resource (e.g., name) or one of the attributes exported by the resource (you can find the list of available attributes in the documentation for each resource). The security group exports an attribute called id, so the expression to reference it will look like this:

aws_security_group.example.id

You can use this security group ID in the vpc_security_group_ids parameter of the aws_instance:

resource "aws_instance" "example" {

ami = "ami-007855ac798b5175e"

instance_type = "t2.micro"

vpc_security_group_ids = [aws_security_group.example.id]

user_data = file("data.sh")

tags = {

Name = "ec2-example"

}

}

When you add a reference from one resource to another, you create an implicit dependency. Terraform parses these dependencies, builds a dependency graph from them, and uses that to automatically figure out in what order it should create resources. For example, if you were to deploy this code from scratch, Terraform would know it needs to create the security group before the EC2 Instance, since the EC2 Instance references the ID of the security group.

When Terraform walks your dependency tree, it will create as many resources in parallel as it can, which means it can apply your changes fairly efficiently. That's the beauty of a declarative language: you just specify what you want and Terraform figures out the most efficient way to make it happen.
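For contrast with the implicit dependency described above, Terraform also has a depends_on meta-argument for the rare cases where no attribute reference exists to express the ordering. The sketch below is illustrative only; this lab does not need it, because the reference to aws_security_group.example.id already orders the two resources.

```hcl
# Sketch only: implicit and explicit dependencies side by side.
resource "aws_instance" "example" {
  ami           = "ami-007855ac798b5175e"
  instance_type = "t2.micro"

  # Implicit dependency: this reference makes Terraform create the group first.
  vpc_security_group_ids = [aws_security_group.example.id]

  # Explicit dependency: redundant here, shown only to illustrate the syntax.
  depends_on = [aws_security_group.example]
}
```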

If you run the apply command, you'll see that Terraform wants to add a security group and replace the EC2 Instance with a new Instance that has the new user data:

$ terraform apply

aws_instance.example: Refreshing state... [id=...]

(...)

Terraform will perform the following actions:

# aws_instance.example must be replaced

-/+ resource "aws_instance" "example" {

ami = "ami-007855ac798b5175e"

arn = "arn:aws:ec2:us-east-1:716651504771:instance/i-066efb0383709ee2b"

associate_public_ip_address = true

availability_zone = "us-east-1a"

(...)

+ user_data = "d71355ea4ffb..." # forces replacement

~ volume_tags = {} -> (known after apply)

~ vpc_security_group_ids = [

- "sg-01b2603a",

] -> (known after apply)

(...)

}

(...)

Plan: 2 to add, 0 to change, 1 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value:

The -/+ in the plan output means "replace"; look for the text "forces replacement" to figure out what is forcing Terraform to do a replacement. With EC2 Instances, changes to many attributes will force the original Instance to be terminated and a completely new Instance to be created (this is an example of the immutable infrastructure paradigm).

Since the plan looks good, enter "yes" and you'll see your new EC2 Instance deploying.

In the instance details panel of the EC2 console, you'll also see the public IP address of this EC2 Instance. Give it a minute or two to boot up and then use a web browser or a tool like curl to make an HTTP request to this IP address at port 80 to see the page served by Apache2:

$ curl http://<EC2_INSTANCE_PUBLIC_IP>:80

Hello, World!

Parameterized Configuration

Our current Terraform code has the port 80 hardcoded in the security group configuration. In real world scenarios you'll probably use that port number in application configuration too. If you have the port number copy/pasted in two places, it's too easy to update it in one place but forget to make the same change in the other place.

To allow you to make your code more configurable, Terraform allows you to define input variables. The syntax for declaring a variable is:

variable "NAME" {

[CONFIG ...]

}

The body of the variable declaration can contain three parameters, all of them optional:

- description: It's always a good idea to use this parameter to document how a variable is used. Your teammates will not only be able to see this description while reading the code, but also when running the plan or apply commands.

- default: There are a number of ways to provide a value for the variable, including passing it in at the command line (using the -var option), via a file (using the -var-file option), or via an environment variable (Terraform looks for environment variables of the name TF_VAR_<variable_name>). If no value is passed in, the variable will fall back to this default value. If there is no default value, Terraform will interactively prompt the user for one.

- type: This allows you to enforce type constraints on the variables a user passes in. Terraform supports a number of type constraints, including string, number, bool, list, map, set, object, tuple, and any. If you don't specify a type, Terraform assumes the type is any.
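To make the type parameter more concrete, here is a minimal sketch of two variables with richer type constraints. Both variable names and their defaults are hypothetical and are not used elsewhere in this lab.

```hcl
# Sketch only: a hypothetical list variable with a type constraint and a default.
variable "allowed_cidr_blocks" {
  description = "CIDR blocks allowed to reach the web server"
  type        = list(string)
  default     = ["0.0.0.0/0"]
}

# A hypothetical object variable; Terraform rejects values that do not match this shape.
variable "instance_settings" {
  description = "Instance type and name tag for the web server"
  type = object({
    instance_type = string
    name          = string
  })
  default = {
    instance_type = "t2.micro"
    name          = "ec2-example"
  }
}
```

A file passed with -var-file uses the same assignment syntax without the variable blocks, for example a single line such as allowed_cidr_blocks = ["10.0.0.0/16"].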

For the web server example, here is how you can create a variable that stores the port number. Create a file called variables.tf (the file can be arbitrarily named, or the code can even live inside main.tf; Terraform parses every .tf file in the current directory before doing anything).

$ vi variables.tf

And paste the following:

variable "server_port" {

description = "The port the server will use for HTTP requests"

type = number

}

Note that the server_port input variable has no default, so if you run the apply command now, Terraform will interactively prompt you to enter a value for server_port and show you the description of the variable:

$ terraform apply

var.server_port

The port the server will use for HTTP requests

Enter a value:

If you don't want to deal with an interactive prompt, you can provide a value for the variable via the -var command-line option:

$ terraform apply -var "server_port=80"

You could also set the variable via an environment variable named TF_VAR_<name>, where <name> is the name of the variable you're trying to set:

$ export TF_VAR_server_port=80

$ terraform apply

And if you don't want to deal with remembering extra command-line arguments every time you run plan or apply, you can specify a default value in variables.tf:

variable "server_port" {

description = "The port the server will use for HTTP requests"

type = number

default = 80

}

To use the value from an input variable in your Terraform code, you can use a new type of expression called a variable reference, which has the following syntax:

var.<VARIABLE_NAME>

For example, here is how you can set the from_port and to_port parameters of the security group to the value of the server_port variable. Edit main.tf:

$ vi main.tf

And modify the Security Group resource:

resource "aws_security_group" "example" {

name = "terraform-example-sg"

ingress {

from_port = var.server_port

to_port = var.server_port

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

}

We can use the same variable when setting, for example, the name of the EC2 instance so we know that it is used for a web server. To use a reference inside of a string literal, you need to use a new type of expression called an interpolation, which has the following syntax:

"${...}"

You can put any valid reference within the curly braces and Terraform will convert it to a string. For example, here's how you can use var.server_port inside the instance name string:

resource "aws_instance" "example" {

ami = "ami-007855ac798b5175e"

instance_type = "t2.micro"

vpc_security_group_ids = [aws_security_group.example.id]

user_data = file("data.sh")

tags = {

Name = "ec2-example-${var.server_port}"

}

}

In addition to input variables, Terraform also allows you to define output variables with the following syntax:

output "<NAME>" {

value = <VALUE>

[CONFIG ...]

}

The NAME is the name of the output variable and VALUE can be any Terraform expression that you would like to output. The CONFIG can contain two additional parameters, both optional:

- description: It's always a good idea to use this parameter to document what type of data is contained in the output variable.

- sensitive: Set this parameter to true to tell Terraform not to log this output at the end of terraform apply. This is useful if the output variable contains sensitive material or secrets, such as passwords or private keys.
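As a minimal sketch of the sensitive parameter, the hypothetical output below marks the instance's private IP as sensitive. The output name is made up for illustration, and a private IP is not really a secret; it simply stands in for one.

```hcl
# Sketch only: a hypothetical output marked as sensitive.
output "instance_private_ip" {
  value       = aws_instance.example.private_ip
  description = "The private IP of the web server"
  sensitive   = true # redacted in the output summary printed by terraform apply
}
```

Note that marking an output as sensitive only hides it from the console summary; the value is still stored in the Terraform state file.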

For example, instead of having to manually poke around the EC2 console to find the IP address of your server, you can provide the IP address as an output variable. Create an outputs.tf file:

$ vi outputs.tf

And paste the following:

output "public_ip" {

value = aws_instance.example.public_ip

description = "The public IP of the web server"

}

Your directory structure should look like this:

/home/cloudshell-user/terraform-demo/

└── basic

├── data.sh

├── main.tf

├── outputs.tf

├── terraform.tfstate

├── terraform.tfstate.backup

└── variables.tf

This code uses an attribute reference again, this time referencing the public_ip attribute of the aws_instance resource. If you run the apply command again, Terraform will apply any changes (since we changed the instance name), and will show you the new output at the very end:

$ terraform apply

(...)

aws_instance.example: Modifying... [id=i-...]

aws_instance.example: Modifications complete after 1s [id=...]

Apply complete! Resources: 0 added, 1 changed, 0 destroyed.

Outputs:

public_ip = x.x.x.x

As you can see, output variables show up in the console after you run terraform apply, which users of your Terraform code may find useful (e.g., you now know what IP to test once the web server is deployed). You can also use the terraform output command to list all outputs without applying any changes:

$ terraform output

public_ip = x.x.x.x

And you can run terraform output <OUTPUT_NAME> to see the value of a specific output called <OUTPUT_NAME>:

$ terraform output public_ip

x.x.x.x

This is particularly handy for scripting. For example, you could create a deployment script that runs terraform apply to deploy the web server, uses terraform output public_ip to grab its public IP, and runs curl on the IP as a quick smoke test to validate that the deployment worked.

Input and output variables are also essential ingredients in creating configurable and reusable infrastructure code.

Create AutoScaling Group

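A single server is a single point of failure, so the next step is to run a cluster of servers in an Auto Scaling Group (ASG), which launches EC2 Instances and replaces failed ones for you. The first step in creating an ASG is to create a launch configuration, which specifies how to configure each EC2 Instance in the ASG. From deploying the single EC2 Instance earlier, you already know exactly how to configure it, and you can reuse almost exactly the same parameters in the aws_launch_configuration resource. Add one to your main.tf: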

$ vi main.tf

And add the following:

resource "aws_launch_configuration" "example" {

image_id = "ami-007855ac798b5175e"

instance_type = "t2.micro"

security_groups = [aws_security_group.example.id]

user_data = file("data.sh")

lifecycle {

create_before_destroy = true

}

}

The only new thing here is the lifecycle setting. Terraform supports several lifecycle settings that let you customize how resources are created and destroyed. The create_before_destroy setting controls the order in which resources are recreated. The default order is to delete the old resource and then create the new one. Setting create_before_destroy to true reverses this order, creating the replacement first, and then deleting the old one. Since every change to a launch configuration creates a totally new launch configuration, you need this setting to ensure that the new configuration is created first, so any ASGs using this launch configuration can be updated to point to the new one, and then the old one can be deleted.
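For a sense of the other lifecycle settings, the sketch below shows prevent_destroy and ignore_changes on the instance from earlier. It is an illustration only and is not meant to be added to this lab: prevent_destroy would block the instance replacements that the lab relies on.

```hcl
# Sketch only: two other lifecycle settings, shown on the instance from earlier.
resource "aws_instance" "example" {
  ami           = "ami-007855ac798b5175e"
  instance_type = "t2.micro"

  lifecycle {
    # Make any plan that would destroy this resource fail with an error.
    prevent_destroy = true

    # Do not plan updates when tags are changed outside of Terraform.
    ignore_changes = [tags]
  }
}
```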

Now you can create the ASG itself using the aws_autoscaling_group resource:

resource "aws_autoscaling_group" "example" {

launch_configuration = aws_launch_configuration.example.id

min_size = 2

max_size = 10

tag {

key = "Name"

value = "terraform-asg-example"

propagate_at_launch = true

}

}

This ASG will run between 2 and 10 EC2 Instances (defaulting to 2 for the initial launch), each tagged with the name "terraform-asg-example". The ASG uses a reference to fill in the launch configuration name.

To make this ASG work, you need to specify one more parameter: availability_zones. This parameter specifies into which availability zones (AZs) the EC2 Instances should be deployed.

You could hard-code the list of AZs (e.g., set it to ["us-east-1a", "us-east-1b"]), but that won't be maintainable or portable (e.g., each AWS account has access to a slightly different set of AZs), so a better option is to use data sources to get the list of AZs in your AWS account.

A data source represents a piece of read-only information that is fetched from the provider (in this case, AWS) every time you run Terraform. Adding a data source to your Terraform configurations does not create anything new; it's just a way to query the provider's APIs for data and to make that data available to the rest of your Terraform code. Each Terraform provider exposes a variety of data sources. For example, the AWS provider includes data sources to look up VPC data, subnet data, AMI IDs, IP address ranges, the current user's identity, and much more.
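As a rough sketch of the VPC and subnet lookups mentioned above (the syntax is explained right below), the following looks up the default VPC and the IDs of its subnets. It assumes your account still has a default VPC in the region; the names "default" and the output name are arbitrary, and the lab itself does not use these lookups.

```hcl
# Sketch only: look up the default VPC in the provider's region.
data "aws_vpc" "default" {
  default = true
}

# Look up the subnets that belong to that VPC.
data "aws_subnets" "default" {
  filter {
    name   = "vpc-id"
    values = [data.aws_vpc.default.id]
  }
}

# Expose the subnet IDs so you can inspect them with terraform output.
output "default_subnet_ids" {
  value = data.aws_subnets.default.ids
}
```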

The syntax for using a data source is very similar to the syntax of a resource:

data "<PROVIDER>_<TYPE>" "<NAME>" {

[CONFIG ...]

}

PROVIDER is the name of a provider (e.g., aws), TYPE is the type of data source you want to use (e.g., vpc), NAME is an identifier you can use throughout the Terraform code to refer to this data source, and CONFIG consists of one or more arguments that are specific to that data source. For example, here is how you can use the aws_availability_zones data source to fetch the list of AZs in your AWS account. Add this to your main.tf:

data "aws_availability_zones" "all" {}

To get the data out of a data source, you use the following attribute reference syntax:

data.<PROVIDER>_<TYPE>.<NAME>.<ATTRIBUTE>

For example, to get the list of AZ names from the aws_availability_zones data source, you would use the following:

data.aws_availability_zones.all.names

Use this value to set the availability_zones argument of your aws_autoscaling_group resource:

resource "aws_autoscaling_group" "example" {

launch_configuration = aws_launch_configuration.example.id

availability_zones = data.aws_availability_zones.all.names

min_size = 2

max_size = 10

tag {

key = "Name"

value = "terraform-asg-example"

propagate_at_launch = true

}

}

Create Load Balancer

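At this point, you can deploy your ASG, but you'll have a small problem: you now have multiple servers, each with its own IP address, and no single endpoint to give your users. The usual solution is a load balancer, and instead of creating one manually, we can create it with Terraform.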

AWS offers several types of load balancers, including:

- Application Load Balancer (ALB): best suited for HTTP and HTTPS traffic.

- Network Load Balancer (NLB): best suited for TCP and UDP traffic.

- Classic Load Balancer (CLB): this is the "legacy" load balancer that predates both the ALB and NLB. It can do HTTP, HTTPS, and TCP, but offers far fewer features than the ALB or NLB.

Since our web servers use HTTP, the ALB would be the best fit, but it requires more code and more explanation, so to keep this short, we're going to use the CLB, which is simpler to use.

You can create a CLB using the aws_elb resource in main.tf:

resource "aws_elb" "example" {

name = "terraform-asg-example"

availability_zones = data.aws_availability_zones.all.names

}

This creates an ELB that will be deployed across all of the AZs in your account.

Note that the aws_elb code above doesn't do much until you tell the CLB how to route requests. To do that, you add one or more listeners which specify what port the CLB should listen on and what port it should route the request to:

resource "aws_elb" "example" {

name = "terraform-asg-example"

availability_zones = data.aws_availability_zones.all.names

# This adds a listener for incoming HTTP requests.

listener {

lb_port = 80

lb_protocol = "http"

instance_port = var.server_port

instance_protocol = "http"

}

}

In the code above, we are telling the CLB to receive HTTP requests on port 80 and to route them to the port used by the Instances in the ASG. Note that, by default, CLBs don't allow any incoming or outgoing traffic (just like EC2 Instances), so you need to add a new security group to explicitly allow inbound requests on port 80 and all outbound requests (the latter is to allow the CLB to perform health checks):

resource "aws_security_group" "elb" {

name = "terraform-example-elb"

# Allow all outbound

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

# Inbound HTTP from anywhere

ingress {

from_port = 80

to_port = 80

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

}

You now need to tell the CLB to use this security group by adding the security_groups parameter:

resource "aws_elb" "example" {

name = "terraform-asg-example"

security_groups = [aws_security_group.elb.id]

availability_zones = data.aws_availability_zones.all.names

# This adds a listener for incoming HTTP requests.

listener {

lb_port = 80

lb_protocol = "http"

instance_port = var.server_port

instance_protocol = "http"

}

}

The CLB can periodically check the health of your EC2 Instances and, if an instance is unhealthy, it will automatically stop routing traffic to it. Let's add an HTTP health check where the CLB will send an HTTP request every 30 seconds to the "/" URL of each of the EC2 Instances and only mark an Instance as healthy if it responds with a 200 OK:

resource "aws_elb" "example" {

name = "terraform-asg-example"

security_groups = [aws_security_group.elb.id]

availability_zones = data.aws_availability_zones.all.names

health_check {

target = "HTTP:${var.server_port}/"

interval = 30

timeout = 3

healthy_threshold = 2

unhealthy_threshold = 2

}

# This adds a listener for incoming HTTP requests.

listener {

lb_port = 80

lb_protocol = "http"

instance_port = var.server_port

instance_protocol = "http"

}

}

How does the CLB know which EC2 Instances to send requests to? You can attach a static list of EC2 Instances to a CLB using the CLB's instances parameter, but with an ASG, Instances will be launching and terminating dynamically all the time, so that won't work. Instead, you can use the load_balancers parameter of the aws_autoscaling_group resource to tell the ASG to register each Instance in the CLB:

resource "aws_autoscaling_group" "example" {

launch_configuration = aws_launch_configuration.example.id

availability_zones = data.aws_availability_zones.all.names

min_size = 2

max_size = 10

load_balancers = [aws_elb.example.name]

health_check_type = "ELB"

tag {

key = "Name"

value = "terraform-asg-example"

propagate_at_launch = true

}

}

Notice that we've also configured the health_check_type for the ASG to "ELB". The default health_check_type is "EC2", which is a minimal health check that only considers an Instance unhealthy if the AWS hypervisor says the server is completely down or unreachable. The "ELB" health check is much more robust, as it tells the ASG to use the CLB's health check to determine if an Instance is healthy or not and to automatically replace Instances if the CLB reports them as unhealthy. That way, Instances will be replaced not only if they are completely down, but also if, for example, they've stopped serving requests because they ran out of memory or a critical process crashed.
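One related setting is the ASG's health_check_grace_period argument, which controls how long the ASG waits after an Instance launches before it acts on health check results. The sketch below repeats the ASG from above with that argument set explicitly. The value of 300 seconds is an assumption chosen for illustration (it should match the provider's default), so tune it to how long your Instances take to boot:

```hcl
resource "aws_autoscaling_group" "example" {
  launch_configuration = aws_launch_configuration.example.id
  availability_zones   = data.aws_availability_zones.all.names

  min_size = 2
  max_size = 10

  load_balancers    = [aws_elb.example.name]
  health_check_type = "ELB"

  # Assumed value: give each new Instance 300 seconds to boot before the
  # ASG starts acting on the CLB's health check results.
  health_check_grace_period = 300

  tag {
    key                 = "Name"
    value               = "terraform-asg-example"
    propagate_at_launch = true
  }
}
```

If the grace period is shorter than the boot time of your web server, the ASG may replace Instances that were simply not ready yet.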

One last thing to do before deploying the load balancer: let's add its DNS name as an output to outputs.tf so it's easier to test if things are working:

$ vi outputs.tf

And add the following:

output "elb_dns_name" {

value = aws_elb.example.dns_name

description = "The domain name of the load balancer"

}

Run terraform apply and read through the plan output. You should see that, in addition to your original single EC2 Instance, Terraform will create a launch configuration, an ASG, a CLB, and a security group. If the plan looks good, type in "yes" and hit enter. When apply completes, you should see the elb_dns_name output:

Outputs:

(...)

elb_dns_name = xxxx.us-east-1.elb.amazonaws.com

Copy this URL down. It'll take a couple of minutes for the Instances to boot and show up as healthy in the CLB. In the meantime, you can inspect what you've deployed. Open up the ASG section of the EC2 console, and you should see that the ASG has been created.

You may have noticed that when you ran the terraform plan or terraform apply commands, Terraform was able to find the resources it created previously and update them accordingly. But how did Terraform know which resources it was supposed to manage?

The answer is that Terraform records information about what infrastructure it created in a Terraform state file. By default, when you run Terraform in a folder (in our case ~/terraform-demo/basic), Terraform creates the file ~/terraform-demo/basic/terraform.tfstate. This file uses a custom JSON format that records a mapping from the Terraform resources in your templates to the representation of those resources in the real world.

If you're using Terraform for a personal project, storing state in a local terraform.tfstate file works just fine. But if you want to use Terraform as a team on a real product, you run into several problems:

- Shared storage for state files: To be able to use Terraform to update your infrastructure, each of your team members needs access to the same Terraform state files. That means you need to store those files in a shared location.

- Locking state files: As soon as data is shared, you run into a new problem: locking. Without locking, if two team members are running Terraform at the same time, you may run into race conditions as multiple Terraform processes make concurrent updates to the state files, leading to conflicts, data loss, and state file corruption.

- Isolating state files: When making changes to your infrastructure, it's a best practice to isolate different environments. For example, when making a change in the staging environment, you want to be sure that you're not going to accidentally break production. But how can you isolate your changes if all of your infrastructure is defined in the same Terraform state file?

Shared storage for state files

The most common technique for allowing multiple team members to access a common set of files is to put them in version control (e.g. Git). With Terraform state, this is a Bad Idea for the following reasons:

- Manual error: It's too easy to forget to pull down the latest changes from version control before running Terraform or to push your latest changes to version control after running Terraform. It's just a matter of time before someone on your team runs Terraform with out-of-date state files and as a result, accidentally rolls back or duplicates previous deployments.

- Locking: Most version control systems do not provide any form of locking that would prevent two team members from running terraform apply on the same state file at the same time.

- Secrets: All data in Terraform state files is stored in plain text. This is a problem because certain Terraform resources need to store sensitive data. For example, if you use the aws_db_instance resource to create a database, Terraform will store the username and password for the database in a state file in plain text. Storing plain-text secrets anywhere is a bad idea, including version control.

Instead of using version control, the best way to manage shared storage for state files is to use Terraform's built-in support for remote backends. A Terraform backend determines how Terraform loads and stores state. The default backend is the local backend, which stores the state file on your local disk. Remote backends allow you to store the state file in a remote, shared store. A number of remote backends are supported, including Amazon S3, Azure Storage, Google Cloud Storage, and HashiCorp's Terraform Cloud and Terraform Enterprise.

Remote backends solve all three of the issues listed above:

- Manual error: Once you configure a remote backend, Terraform will automatically load the state file from that backend every time you run plan or apply, and it will automatically store the state file in that backend after each apply, so there's no chance of manual error.

- Locking: Most of the remote backends natively support locking. To run terraform apply, Terraform will automatically acquire a lock; if someone else is already running apply, they will already have the lock, and you will have to wait. You can run apply with the -lock-timeout=<TIME> parameter to tell Terraform to wait up to TIME for a lock to be released (e.g., -lock-timeout=10m will wait for 10 minutes).

- Secrets: Most of the remote backends natively support encryption in transit and encryption on disk of the state file. Moreover, those backends usually expose ways to configure access permissions (e.g., using IAM policies with an S3 bucket), so you can control who has access to your state files and the secrets they may contain.

If you're using Terraform with AWS, Amazon S3 (Simple Storage Service), which is Amazon's managed file store, is typically your best bet as a remote backend for the following reasons:

- It supports encryption, which reduces worries about storing sensitive data in state files.

- It supports locking via DynamoDB. More on this below.

- It supports versioning, so every revision of your state file is stored, and you can roll back to an older version if something goes wrong.

- It's inexpensive, with most Terraform usage easily fitting into the free tier.

To enable remote state storage with S3, the first step is to create an S3 bucket. Create a new backend directory and change to it:

$ mkdir -p ~/terraform-demo/backend

$ cd ~/terraform-demo/backend

Create a main.tf file in this new folder:

$ vi main.tf

At the top of the file, specify AWS as the provider, then configure the bucket using the aws_s3_bucket resource:

provider "aws" {

region = "us-east-1"

}

resource "aws_s3_bucket" "terraform_state" {

bucket = "ita-terraform-state-${random_integer.ri.result}"

force_destroy = true

# Enable versioning so we can see the full revision history of our

# state files

versioning {

enabled = true

}

# Enable server-side encryption by default

server_side_encryption_configuration {

rule {

apply_server_side_encryption_by_default {

sse_algorithm = "AES256"

}

}

}

}

resource "random_integer" "ri" {

min = 10000

max = 99999

}

output "bucket_name" {

value = aws_s3_bucket.terraform_state.bucket

description = "The name of the bucket"

}

This code sets three arguments:

- bucket: This is the name of the S3 bucket. Note that S3 bucket names must be globally unique amongst all AWS customers. Therefore, the code uses a random_integer resource to make the bucket name unique, and an output so you can copy the resulting name.

- versioning: This block enables versioning on the S3 bucket, so that every update to a file in the bucket actually creates a new version of that file. This allows you to see older versions of the file and revert to those older versions at any time.

- server_side_encryption_configuration: This block turns server-side encryption on by default for all data written to this S3 bucket. This ensures that your state files, and any secrets they may contain, are always encrypted on disk when stored in S3.
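Because the state file may contain secrets, you may also want to make sure the bucket can never be exposed publicly. The following is a minimal sketch of one way to do that, using the AWS provider's aws_s3_bucket_public_access_block resource. It is an optional addition, not part of the original codelab, and it assumes the bucket resource is named terraform_state as above:

```hcl
# Optional, illustrative addition to backend/main.tf: block every form of
# public access to the state bucket.
resource "aws_s3_bucket_public_access_block" "terraform_state" {
  bucket = aws_s3_bucket.terraform_state.id

  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```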

Next, you need to create a DynamoDB table to use for locking. To use DynamoDB for locking with Terraform, you must create a DynamoDB table that has a primary key called LockID (with this exact spelling and capitalization!). You can create such a table using the aws_dynamodb_table resource:

resource "aws_dynamodb_table" "terraform_locks" {

name = "terraform-lock-${random_integer.ri.result}"

billing_mode = "PAY_PER_REQUEST"

hash_key = "LockID"

attribute {

name = "LockID"

type = "S"

}

}

output "dynamo_table" {

value = aws_dynamodb_table.terraform_locks.name

description = "The name of the DynamoDB table"

}

Run terraform init to download the provider code and then run terraform apply to deploy. Once everything is deployed, you will have an S3 bucket and DynamoDB table, but your Terraform state will still be stored locally. To configure Terraform to store the state in your S3 bucket (with encryption and locking), you need to add a backend configuration to your Terraform code. This is configuration for Terraform itself, so it lives within a terraform block, and has the following syntax:

terraform {

backend "<BACKEND_NAME>" {

[CONFIG...]

}

}

Where BACKEND_NAME is the name of the backend you want to use (e.g., "s3") and CONFIG consists of one or more arguments that are specific to that backend (e.g., the name of the S3 bucket to use).

Change back to the basic directory:

$ cd ~/terraform-demo/basic

Add the backend configuration for an S3 backend to main.tf:

terraform {

backend "s3" {

# Replace this with your bucket name!

bucket = "ita-terraform-state-RANDOM_INTEGER"

key = "global/s3/terraform.tfstate"

region = "us-east-1"

# Replace this with your DynamoDB table name!

dynamodb_table = "terraform-lock-RANDOM_INTEGER"

encrypt = true

}

}

Let's go through these settings one at a time:

- bucket: The name of the S3 bucket to use. Make sure to replace this with the name of the S3 bucket you created earlier.

- key: The file path within the S3 bucket where the Terraform state file should be written.

- region: The AWS region where the S3 bucket lives.

- dynamodb_table: The DynamoDB table to use for locking. Make sure to replace this with the name of the DynamoDB table you created earlier.

- encrypt: Setting this to true ensures your Terraform state will be encrypted on disk when stored in S3. We already enabled default encryption in the S3 bucket itself, so this is here as a second layer to ensure that the data is always encrypted.
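Backend blocks cannot reference variables or resources, which is why the bucket and table names have to be pasted in literally. One way to keep those values out of main.tf is Terraform's partial backend configuration: leave them out of the backend block and supply them from a separate file at init time. The sketch below shows the idea; the file name backend.hcl is an assumption, and the RANDOM_INTEGER placeholders still need to be replaced with your own values.

In main.tf, keep only the settings that are the same for everyone:

```hcl
terraform {
  backend "s3" {
    key     = "global/s3/terraform.tfstate"
    region  = "us-east-1"
    encrypt = true
  }
}
```

In backend.hcl (hypothetical file name), put the remaining settings:

```hcl
# Replace these with your own bucket and table names!
bucket         = "ita-terraform-state-RANDOM_INTEGER"
dynamodb_table = "terraform-lock-RANDOM_INTEGER"
```

You would then initialize with terraform init -backend-config=backend.hcl instead of a plain terraform init.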

To tell Terraform to store your state file in this S3 bucket, you're going to use the terraform init command again. This little command can not only download provider code, but also configure your Terraform backend. Moreover, the init command is idempotent, so it's safe to run it over and over again:

$ terraform init

Initializing the backend...

Do you want to copy existing state to the new backend?

Pre-existing state was found while migrating the previous "local" backend to the

newly configured "s3" backend. No existing state was found in the newly

configured "s3" backend. Do you want to copy this state to the new "s3"

backend? Enter "yes" to copy and "no" to start with an empty state.

Enter a value:

Terraform will automatically detect that you already have a state file locally and prompt you to copy it to the new S3 backend. If you type in "yes," you should see:

Successfully configured the backend "s3"! Terraform will automatically

use this backend unless the backend configuration changes.

After running this command, your Terraform state will be stored in the S3 bucket. You can check this by heading over to the S3 console in your browser and clicking your bucket.

With this backend enabled, Terraform will automatically pull the latest state from this S3 bucket before running a command, and automatically push the latest state to the S3 bucket after running a command.
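The key setting also offers one way to approach the isolation problem raised earlier: give each environment its own Terraform configuration folder whose backend points at a different key in the same bucket, so each environment gets its own state file. A minimal sketch, assuming a hypothetical staging environment kept in its own folder (the folder and the key path are illustrative and not part of this codelab):

```hcl
# Hypothetical ~/terraform-demo/staging/main.tf
terraform {
  backend "s3" {
    # Replace this with your bucket name!
    bucket = "ita-terraform-state-RANDOM_INTEGER"

    # A different key per environment keeps the state files separate.
    key    = "staging/terraform.tfstate"
    region = "us-east-1"

    # Replace this with your DynamoDB table name!
    dynamodb_table = "terraform-lock-RANDOM_INTEGER"
    encrypt        = true
  }
}
```

With this layout, running terraform apply in the staging folder only reads and writes the staging state file, so a mistake there cannot touch the state of another environment.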

When you're done experimenting with Terraform, it's a good idea to remove all the resources you created so AWS doesn't charge you for them. Since Terraform keeps track of what resources you created, cleanup is simple. All you need to do is run the destroy command:

$ cd ~/terraform-demo/basic

$ terraform destroy

(...)

Terraform will perform the following actions:

# aws_autoscaling_group.example will be destroyed

- resource "aws_autoscaling_group" "example" {

(...)

}

# aws_launch_configuration.example will be destroyed

- resource "aws_launch_configuration" "example" {

(...)

}

# aws_elb.example will be destroyed

- resource "aws_elb" "example" {

(...)

}

(...)

Plan: 0 to add, 0 to change, 8 to destroy.

Do you really want to destroy all resources?

Terraform will destroy all your managed infrastructure, as shown

above. There is no undo. Only 'yes' will be accepted to confirm.

Enter a value:

Once you type in "yes" and hit enter, Terraform will build the dependency graph and delete all the resources in the right order, using as much parallelism as possible. In about a minute, your AWS account should be clean again.

Next, delete the S3 bucket and DynamoDB table:

$ cd ~/terraform-demo/backend

$ terraform destroy

(...)

Finally, remove the autocomplete plugin and code:

$ rm -rf ~/terraform-demo

$ rm -rf ~/.vim/pack/plugins/start/vim-terraform

Thank you! :)